%matplotlib inline

%load_ext rpy2.ipython

from apiclient.discovery import build

from apiclient.errors import HttpError

from oauth2client.tools import argparser

# Set DEVELOPER_KEY to the API key value from the APIs & auth > Registered apps

# tab of

# https://cloud.google.com/console

# Please ensure that you have enabled the YouTube Data API for your project.

DEVELOPER_KEY = "AIzaSyBfV1CYhferAUl0UzrH9-_Fv3eFHlIqM3M"

YOUTUBE_API_SERVICE_NAME = "youtube"

YOUTUBE_API_VERSION = "v3"

youtube = build(YOUTUBE_API_SERVICE_NAME, YOUTUBE_API_VERSION, developerKey=DEVELOPER_KEY)

The rpy2.ipython extension is already loaded. To reload it, use: %reload_ext rpy2.ipython

%%R

# Load R libraries silently in slide not shown

library(dplyr)

library(ggplot2)

library(reshape2)

library(stringr)

Data Analysis Examples

Meetup #4

Data Analysis Examples

- YouTube Video Ranking

- Bayesian Rankings vs ML Rankings

- Bayesian Rankings vs ML Rankings

- Caribbean Crime Forecasting

- Hierarchical Modeling

- Gaussian Processes

- Paint By Numbers

- Mixture Models

- Infinite Mixture Models

- Putting it all together

Ranking YouTube Videos

- Collect some data

- Determine the best ones by looking at which are "liked" with the highest probability

Done. Maybe?

def get_videos(query, max_results):

response = youtube.search().list(

q=query,

part="id,snippet",

maxResults=max_results,

type="video"

).execute()

videos = {}

for result in response.get("items", []):

if result["id"]["kind"] == "youtube#video":

videos[result['id']['videoId']] = result['snippet']['title']

return videos

def get_statistics(videos):

response = youtube.videos().list(

id=','.join(videos.keys()),

part="id,statistics",

maxResults=len(videos)

).execute()

res = []

for stat in response.get('items', []):

vstat = {'id': stat['id'], 'title': videos[stat['id']]}

vstat.update(stat['statistics'])

res.append(vstat)

return pd.DataFrame(res).set_index('id').convert_objects(convert_numeric=True)

Get Some Data¶

videos = get_videos('office+pranks', 50)

stats = get_statistics(videos)[['title', 'likeCount', 'dislikeCount']]

stats.head(15)

| title | likeCount | dislikeCount | |

|---|---|---|---|

| id | |||

| q4360lNoW7A | The Dental Office [FULL] - Pranks and Hidden C... | 54 | 17 |

| TEpB8JZ8SWg | Moosejaw's Office Pranks | 6 | 0 |

| hiW1pDzWG-s | The Office Jim and Dwight pranks 1080p | 50 | 5 |

| 9G9vgT8bEb0 | Office Prank Revenge! - office pranks | 2633 | 223 |

| gmxQSwwTRqU | [FULL] Japanese Dinosaur Prank | 2298 | 87 |

| U1PHpkdvNOs | Gareth's Stapler - The Office - BBC | 1203 | 71 |

| jihMdGOXO3E | How to Do the Autocorrect Prank | Office Pranks | 71 | 5 |

| q0XdthbOkMU | Best Office Prank Ever | 13063 | 1898 |

| cY1vzC62ZWo | How to Do the Mayo Donut Prank | Office Pranks | 48 | 6 |

| tbSaenItxNU | Mother of all Pranks | Fake cops raid company ... | 1836 | 154 |

| wDqYcvFKlFU | Day 4: Office Pranks | 2 | 0 |

| fYq2_fu30tI | Cousin Sal's Surprise Ice Bucket Challenge | 2798 | 62 |

| OcIXOFnYlyQ | Dinosaur Prank Made in Japan T Rex Fail !! | 1186 | 97 |

| jtrjY8Yoor0 | Office Pranks and Shenanigans at ITS HQ | 300 | 18 |

| 4aViH-dpU2I | EN - Max Payne 3 - s4 - Office pranks! | 3 | 1 |

Rank by likeCount Ratio¶

stats['totalVotes'] = stats['likeCount'] + stats['dislikeCount']

stats['p'] = stats['likeCount'].astype(np.float64) / stats['totalVotes']

stats.sort(['p', 'totalVotes'], ascending=False).head(25)

| title | likeCount | dislikeCount | totalVotes | p | |

|---|---|---|---|---|---|

| id | |||||

| zN7KrZuIZbI | The office - Jim's best pranks | 17 | 0 | 17 | 1.000000 |

| e6eqdhjFeMg | How To: Office Pranks by Moosejaw | 15 | 0 | 15 | 1.000000 |

| d1w8-jqZCbs | Rise Guys Off Air 7/10/15 Office pranks, takin... | 11 | 0 | 11 | 1.000000 |

| TEpB8JZ8SWg | Moosejaw's Office Pranks | 6 | 0 | 6 | 1.000000 |

| klbHdec-V1I | Office Pranks | Super Gluing Desk Drawers | Ti... | 6 | 0 | 6 | 1.000000 |

| wDqYcvFKlFU | Day 4: Office Pranks | 2 | 0 | 2 | 1.000000 |

| 7yVAVDkyUFs | Dwight Schrute's Desk // Jim Vs Dwight Pranks ... | 289 | 1 | 290 | 0.996552 |

| WDxeQ4G1ZSw | Cousin Sal's No-Prank Prank | 3833 | 27 | 3860 | 0.993005 |

| xLxHtBt2jtU | Asian Jim // Jim Vs Dwight // The Office US | 3566 | 28 | 3594 | 0.992209 |

| fYq2_fu30tI | Cousin Sal's Surprise Ice Bucket Challenge | 2798 | 62 | 2860 | 0.978322 |

| -UACtIzxv_U | 6 Harmless Office Pranks | 14403 | 383 | 14786 | 0.974097 |

| glFrp-CmNVA | Stapler in Jelo // Jim Vs Dwight Pranks // The... | 398 | 11 | 409 | 0.973105 |

| W2o5JhF38Aw | WKUK - Office Pranks | 3499 | 101 | 3600 | 0.971944 |

| 8kvbtbfAq8I | 10 Best April Fools Pranks | 22511 | 663 | 23174 | 0.971390 |

| QCgDzUtLkCA | Japanese Dinosaur Prank | 3026 | 106 | 3132 | 0.966156 |

| xLyh_y5c0-A | 12 Evil Pranks Taken To The Next Level (Photos) | 21027 | 754 | 21781 | 0.965383 |

| gmxQSwwTRqU | [FULL] Japanese Dinosaur Prank | 2298 | 87 | 2385 | 0.963522 |

| 4xQb9Kl-O3E | LG Ultra HD 84" TV PRANK (METEOR EXPLODES DURI... | 21424 | 814 | 22238 | 0.963396 |

| uq9F9h-kuIw | Air Horn + Office Chair Prank (Extended Cut) -... | 49 | 2 | 51 | 0.960784 |

| PDzinoEspBI | Office prank gone wrong! | 17785 | 782 | 18567 | 0.957882 |

| t5SLR1qLu-4 | 10 OFFICE PRANKS!! - HOW TO PRANK | 18083 | 841 | 18924 | 0.955559 |

| M4ML7jFV8NA | Office Prank - Making an Office Disappear. | 341 | 17 | 358 | 0.952514 |

| U1PHpkdvNOs | Gareth's Stapler - The Office - BBC | 1203 | 71 | 1274 | 0.944270 |

| jtrjY8Yoor0 | Office Pranks and Shenanigans at ITS HQ | 300 | 18 | 318 | 0.943396 |

| JgNJciPuCdI | Best Pranks Compilation | 5664 | 393 | 6057 | 0.935116 |

videos = stats.copy()

videos['title'] = videos['title'].str[:64]

videos = videos.rename(columns={'likeCount': 'likes', 'dislikeCount':'dislikes', 'totalVotes': 'n'})

videos = videos.reset_index()

videos.head()

| id | title | likes | dislikes | n | p | |

|---|---|---|---|---|---|---|

| 0 | q4360lNoW7A | The Dental Office [FULL] - Pranks and Hidden C... | 54 | 17 | 71 | 0.760563 |

| 1 | TEpB8JZ8SWg | Moosejaw's Office Pranks | 6 | 0 | 6 | 1.000000 |

| 2 | hiW1pDzWG-s | The Office Jim and Dwight pranks 1080p | 50 | 5 | 55 | 0.909091 |

| 3 | 9G9vgT8bEb0 | Office Prank Revenge! - office pranks | 2633 | 223 | 2856 | 0.921919 |

| 4 | gmxQSwwTRqU | [FULL] Japanese Dinosaur Prank | 2298 | 87 | 2385 | 0.963522 |

from IPython.lib.display import YouTubeVideo

YouTubeVideo('xLxHtBt2jtU', 800, 600)

Video Ranking Model

What we're really doing:

$$p_i \text{ = Probability that an individual viewer will like video }i\text{,}$$$$L_i \text{ = # of Likes for video }i\text{,}$$$$D_i \text{ = # of Dislikes for video }i$$$$n_i = L_i + D_i$$Model Realistic? Sort of.

Maximum Likelihood Estimate for $p_i$ = $\frac{L_i}{n_i}$

Good estimate? Yea, but not when the sample size (i.e. $n_i$) is small (e.g. 1/1, 2/2, 0/1)

How can we model the probability of a like but somehow account for sample size?¶

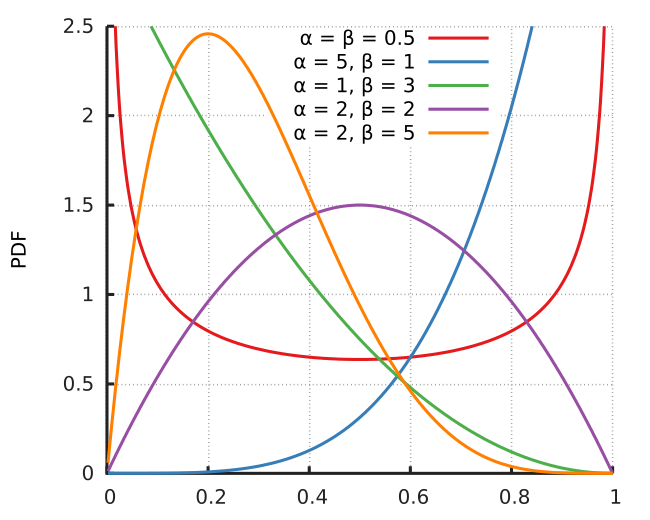

The Beta Distribution

It's only defined on 0 to 1 and has two parameters, plenty enough to manipulate the shape to be what we want.

Probability Density Function: $$ p(x) = \frac{x^{\alpha - 1}(1 - x)^{\beta - 1}}{B(\alpha, \beta)},$$ where $B$ is the Beta Function

Making up a Solution

Let's just say this because why not:

- $\alpha$ parameter in $B$ distribution = # video likes

- $\beta$ parameter in $B$ distribution = # video dislikes

What do these distributions look like for videos with the same like / dislike ratio?

Say 4 videos had the following stats:

| Video | Likes | Dislikes | Ratio |

|---|---|---|---|

| 1 | 9 | 3 | 9/3 = 3 |

| 2 | 15 | 5 | 15/5 = 3 |

| 3 | 48 | 16 | 48/16 = 3 |

| 4 | 600 | 200 | 600/200 = 3 |

%%R -w 800 -h 400 -u px

n <- 10000

rbind(

data.frame(name='Video 1 - Beta(9, 3)', value=rbeta(n,6,2)),

data.frame(name='Video 2 - Beta(15, 5)', value=rbeta(n,15,5)),

data.frame(name='Video 3 - Beta(48, 16)', value=rbeta(n,48,16)),

data.frame(name='Video 4 - Beta(600, 200)', value=rbeta(n,600,200))

) %>%

ggplot(aes(x=value)) + geom_density() + xlab('p') +

facet_wrap(~name, ncol=2, scale="free_y") +

ggtitle('Beta Distribution for Each Video') +

theme_bw()

%%R -w 800 -h 400 -u px

n <- 10000

rbind(

data.frame(name='Video 1 - Beta(9, 3)', value=rbeta(n,9,3), lo=qbeta(.05,9,3), mean=9/12),

data.frame(name='Video 2 - Beta(15, 5)', value=rbeta(n,15,5), lo=qbeta(.05,15,5), mean=15/20),

data.frame(name='Video 3 - Beta(48, 16)', value=rbeta(n,48,16), lo=qbeta(.05,48,16), mean=48/64),

data.frame(name='Video 4 - Beta(600, 200)', value=rbeta(n,600,200), lo=qbeta(.05,600,200), mean=600/800)

) %>%

ggplot(aes(x=value)) + geom_density() + xlab('p') +

facet_wrap(~name, ncol=2, scale="free_y") +

ggtitle('Beta Distribution for Each Video') +

geom_vline(aes(xintercept=lo), color='red', linetype=2) +

geom_vline(aes(xintercept=mean), color='black') +

theme_bw()

How to get there?¶

BEFORE we see any data, let's say we estimate $p_i$ to be something flat like this:

ax = pd.Series(np.repeat(1, 100), np.linspace(0, 1, 100)).plot()

ax.set_xlabel('$p_i$')

ax.set_ylabel('$Density$')

ax.set_title('Beta(1, 1) Distribution')

<matplotlib.text.Text at 0x117a548d0>

Bayes Theorem¶

Given a prior belief in $p_i$ AND some data $D$, what should be our new belief as to what $p_i$ is?

Luckily there are some rules that will help:

In English:

$$= \frac{Pr( \text{X likes and Y dislikes given a value for }p_i) \cdot Pr(p_i\text{in the first place})}{\text{the sum of all possible values for the numerator}} $$

The bayesian lingo:

Example¶

- Say our data, $D$, was 2 likes and 1 dislike

- Also say the we want to calculate an approximation to the posterior for $p \in [.1, .5, .9]$

| p | Pr(D|p) | Pr(p) | Pr(D|p) * Pr(p) |

|---|---|---|---|

| .1 | 3 * .1^2 * (1-.1) = 0.027 | 1 | 0.027 |

| .5 | 3 * .5^2 * (1-.5) = 0.375 | 1 | 0.375 |

| .9 | 3 * .9^2 * (1-.9) = 0.243 | 1 | 0.243 |

marginal - The sum of all "likelihood * prior" values = 0.027 + 0.375 + 0.243 = 0.645

Posterior Estimate¶

Divide last column above by sum (over same column) to get posterior estimate:

| p | Pr(D|p) * Pr(p) / marginal | Posterior: Pr(p|D) |

|---|---|---|

| .1 | 0.027 / 0.645 | 0.042 |

| .5 | 0.375 / 0.645 | 0.581 |

| .9 | 0.243 / 0.645 | 0.377 |

Back to Theory¶

The "likelihood" or $Pr(D|p)$ above is technically from a binomial distribution

The mass function of this distribution is:

$$P(k, n) \sim \textstyle {n \choose k}\, p^k (1-p)^{n-k}$$

- $k$ is the number of likes in our case

- $n$ is the number of likes + number of dislikes (ie votes)

- $p$ is a current estimate for the actual $p_i$ (ie the probability of getting a like)

Recall the "prior" was from a Beta distribution with density function:

$$P(p) = p^{\alpha - 1}(1 - p)^{\beta - 1}/B(\alpha, \beta)$$

- $p$ is probability of getting a like

- Our prior used so far was $Beta(1, 1)$ but in general, you can have any prior like $Beta(\alpha, \beta)$

Posteriors¶

- The posterior actually looks like this then:

- Given the above things, plugging it all together gives (but leaving out the sum/integral on the bottom):

The Punchline¶

Through algrebra and magic, the posterior actually reduces to another BETA distribution. The final form is:

$$ Pr(p_i|L_i,D_i,n_i) = \text{Beta}(\alpha + L_i, \beta + D_i)$$

If our prior was uniform (ie Beta(1, 1)), what is the distribution of $p_i$ if a video has 5 likes and 2 dislikes?

$$ p_1 \sim \text{Beta}(1 + 5, 1 + 2) = \text{Beta}(6, 3)$$

And for one with 50 likes and 20 dislikes?

$$ p_2 \sim \text{Beta}(1 + 50, 1 + 20) = \text{Beta}(51, 21)$$

%%R -u px -h 400 -w 1200

data.frame(x=seq(0, 1, .01)) %>%

mutate('5.likes'=dbeta(x, 6, 3), '50.likes'=dbeta(x, 51, 21)) %>%

melt(id.vars='x') %>% ggplot(aes(x=x, y=value, color=variable)) + geom_line() + theme_bw()

Applying Bayesian Rank to Data¶

- Apply the model above to our YouTube videos and see how that changes the list:

from scipy.stats import beta

print('Top 10 Videos by Bayesian Rank:')

videos['bayes.p'] = videos.apply(lambda x: beta.ppf(.5, x['likes'] + 1, x['dislikes'] + 1), axis=1)

videos.sort(['bayes.p', 'n'], ascending=False).head(10)

Top 10 Videos by Bayesian Rank:

| id | title | likes | dislikes | n | p | bayes.p | |

|---|---|---|---|---|---|---|---|

| 38 | 7yVAVDkyUFs | Dwight Schrute's Desk // Jim Vs Dwight Pranks ... | 287 | 1 | 288 | 0.996528 | 0.994199 |

| 23 | WDxeQ4G1ZSw | Cousin Sal's No-Prank Prank | 3798 | 27 | 3825 | 0.992941 | 0.992769 |

| 36 | xLxHtBt2jtU | Asian Jim // Jim Vs Dwight // The Office US | 3536 | 28 | 3564 | 0.992144 | 0.991959 |

| 12 | fYq2_fu30tI | Cousin Sal's Surprise Ice Bucket Challenge | 2779 | 62 | 2841 | 0.978177 | 0.977952 |

| 34 | -UACtIzxv_U | 6 Harmless Office Pranks | 14390 | 382 | 14772 | 0.974140 | 0.974097 |

| 26 | W2o5JhF38Aw | WKUK - Office Pranks | 3498 | 101 | 3599 | 0.971937 | 0.971762 |

| 15 | 8kvbtbfAq8I | 10 Best April Fools Pranks | 22439 | 661 | 23100 | 0.971385 | 0.971358 |

| 29 | glFrp-CmNVA | Stapler in Jelo // Jim Vs Dwight Pranks // The... | 393 | 11 | 404 | 0.972772 | 0.971213 |

| 37 | wsnFKAdi2wo | Best Of Just For Laughs Gags - Funniest Awkwar... | 11218 | 380 | 11598 | 0.967236 | 0.967182 |

| 18 | QCgDzUtLkCA | Japanese Dinosaur Prank | 3026 | 106 | 3132 | 0.966156 | 0.965957 |

print('Top 10 Videos by Maximum Likelihood Rank:')

videos.sort(['p', 'n'], ascending=False).head(10)

Top 10 Videos by Maximum Likelihood Rank:

| id | title | likes | dislikes | n | p | bayes.p | |

|---|---|---|---|---|---|---|---|

| 17 | e6eqdhjFeMg | How To: Office Pranks by Moosejaw | 15 | 0 | 15 | 1.000000 | 0.957603 |

| 43 | zN7KrZuIZbI | The office - Jim's best pranks | 12 | 0 | 12 | 1.000000 | 0.948078 |

| 30 | d1w8-jqZCbs | Rise Guys Off Air 7/10/15 Office pranks, takin... | 11 | 0 | 11 | 1.000000 | 0.943874 |

| 1 | TEpB8JZ8SWg | Moosejaw's Office Pranks | 6 | 0 | 6 | 1.000000 | 0.905724 |

| 24 | klbHdec-V1I | Office Pranks | Super Gluing Desk Drawers | Ti... | 6 | 0 | 6 | 1.000000 | 0.905724 |

| 11 | wDqYcvFKlFU | Day 4: Office Pranks | 2 | 0 | 2 | 1.000000 | 0.793701 |

| 38 | 7yVAVDkyUFs | Dwight Schrute's Desk // Jim Vs Dwight Pranks ... | 287 | 1 | 288 | 0.996528 | 0.994199 |

| 23 | WDxeQ4G1ZSw | Cousin Sal's No-Prank Prank | 3798 | 27 | 3825 | 0.992941 | 0.992769 |

| 36 | xLxHtBt2jtU | Asian Jim // Jim Vs Dwight // The Office US | 3536 | 28 | 3564 | 0.992144 | 0.991959 |

| 12 | fYq2_fu30tI | Cousin Sal's Surprise Ice Bucket Challenge | 2779 | 62 | 2841 | 0.978177 | 0.977952 |

%%R -u px -w 800 -h 400

data.frame(x=seq(.7, 1, .005)) %>%

mutate('Top.Bayes.Video'=dbeta(x, 287, 2), 'Top.ML.Video'=dbeta(x, 16, 1)) %>%

melt(id.vars=c('x')) %>%

mutate(rank=ifelse(variable == 'Top.Bayes.Video',

qbeta(.05, 287 + 1, 1 + 1),

qbeta(.05, 16 + 1, 0 + 1)

)) %>% ggplot(aes(x=x, y=value)) + geom_line() +

facet_wrap(~variable, ncol=1, scales='free') +

geom_vline(aes(xintercept=rank), linetype=2, color='red') +

ggtitle('Top Bayes-Ranked Video vs Top ML-Ranked Video') +

theme_bw()

# Spreadsheet taken from https://www.unodc.org/documents/gsh/data/GSH2013_Homicide_count_and_rate.xlsx

crime_file = '/Users/eczech/repos/portfolio/demonstrative/python/notebooks/meetups/data_analysis_examples/meetup_pres_files/country_homicides.csv'

crime_data = pd.io.parsers.read_csv(crime_file, delimiter=',', header='infer')

crime_data = crime_data.convert_objects(convert_numeric=True)

# Fill in missing every-other cells

cty = crime_data['Country']

crime_data['Country'] = cty.where(~cty.isnull(), cty.shift(1))

crime_data = crime_data.drop(['Region', 'Subregion', 'Code', 'Source'], axis=1).set_index(['Type', 'Country'])

crime_data.index.name ='Category'

crime_data.columns.name = 'Year'

| Year | 2000 | 2001 | 2002 | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Type | Country | |||||||||||||

| Rate | Anguilla | 9.0 | 0.0 | 17.1 | 16.6 | 8.1 | 7.9 | 31.0 | 30.4 | 7.5 | NaN | NaN | NaN | NaN |

| Count | Anguilla | 1.0 | 0.0 | 2.0 | 2.0 | 1.0 | 1.0 | 4.0 | 4.0 | 1.0 | NaN | NaN | NaN | NaN |

| Rate | Antigua and Barbuda | 6.4 | 8.9 | 6.2 | 6.2 | 4.9 | 3.6 | 13.2 | 20.1 | 18.7 | 18.5 | 6.9 | NaN | 11.2 |

| Count | Antigua and Barbuda | 5.0 | 7.0 | 5.0 | 5.0 | 4.0 | 3.0 | 11.0 | 17.0 | 16.0 | 16.0 | 6.0 | NaN | 10.0 |

| Rate | Aruba | NaN | NaN | 5.3 | 4.1 | 2.0 | 12.0 | 9.9 | 5.9 | 4.9 | 3.9 | 3.9 | NaN | NaN |

Raw Caribben Homicide Data¶

- Data taken from a study done by the United Nations Office on Drug and Crime (in 2013)

- No data present past 2013, and a lot of it is missing for other years in some countries too

- This is almost definitely the most reputable, up-to-date source of data like this out there

What does this mean for 2015?

# crime_data = .. load from csv and do same basic cleanup ..

crime_data.loc['Rate'].head()

| Year | 2000 | 2001 | 2002 | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Country | |||||||||||||

| Anguilla | 9.0 | 0.0 | 17.1 | 16.6 | 8.1 | 7.9 | 31.0 | 30.4 | 7.5 | NaN | NaN | NaN | NaN |

| Antigua and Barbuda | 6.4 | 8.9 | 6.2 | 6.2 | 4.9 | 3.6 | 13.2 | 20.1 | 18.7 | 18.5 | 6.9 | NaN | 11.2 |

| Aruba | NaN | NaN | 5.3 | 4.1 | 2.0 | 12.0 | 9.9 | 5.9 | 4.9 | 3.9 | 3.9 | NaN | NaN |

| Bahamas | 24.9 | 14.2 | 16.8 | 15.8 | 13.6 | 15.8 | 18.2 | 22.8 | 21.0 | 24.5 | 26.1 | 34.7 | 29.8 |

| Barbados | 7.5 | 9.3 | 9.3 | 12.2 | 8.1 | 10.6 | 12.7 | 9.8 | 9.0 | 6.8 | 11.1 | 9.6 | 7.4 |

plt_data = crime_data.T['Rate'].reset_index()

%%R -i plt_data -w 800 -h 300 -u px

plt_data %>%

mutate_each(funs(as.numeric), -Year) %>%

melt(id.vars='Year', variable.name='Country', value.name='Homicide.Rate') %>%

ggplot(aes(x=Year, y=Country, fill=Homicide.Rate)) + geom_tile()

%%R -i plt_data -w 1000 -h 400 -u px

plt_data %>%

mutate_each(funs(as.numeric), -Year) %>%

mutate(Year = as.integer(as.character(Year))) %>%

melt(id.vars='Year', variable.name='Country', value.name='Homicide.Rate') %>%

group_by(Country) %>% do({ x <- .;

x$Has.Missing <- ifelse(sum(is.na(x$Homicide.Rate)) > 0, 'Has Missing Data', 'No Missing Data'); x

}) %>%

ggplot(aes(x=Year, y=Homicide.Rate, color=Country)) + geom_line() +

facet_wrap(~Has.Missing, nrow=2) + theme_bw() +

scale_x_continuous(breaks=2000:2012)

# on why population per area is a good idea to predict crime rates: http://theipti.org/wp-content/uploads/2012/02/covariance.pdf

file = '/Users/eczech/repos/portfolio/demonstrative/R/meetups/data_analysis_examples/data/crime_data.csv'

crime_data.loc['Rate'].reset_index().to_csv(file, index=False)

Switch to R¶

Mixture Models¶

Mixture Model Definition¶

- Assume there are 4 multivariate, 2 dimensional normal distributions $N_i, i \in [1, 4]$

The "probability" of any point $x$ above occuring is:

$$ Pr(x) = \sum_1^4{\gamma_i} * N_i(x|\mu_i, \Sigma_i)$$- $\gamma_i$ - The probability of a point being in a cluster in the first place (think # of points in that cluster by # points total)

- $\mu_i$ - The middle of cluster $i$

- $\Sigma_i$ - The correlation between x and y in cluster $i$

from pbn import operations as ops

from pbn import conversions as convert

from pbn import functions as fxn

from scipy import ndimage

ROOT_IMG_DIR = '/Users/eczech/repos/portfolio/demonstrative/python/notebooks/paint_by_numbers/images/'

load_image = lambda f: matimg.imread(ROOT_IMG_DIR+f)

Making a Paint By Numbers¶

- Images are really just arrays

- 2 dimensional arrays with an R, G, and B color value at each location

- So really 3 dimensional

An example:

import matplotlib.image as matimg

img_rgb = matimg.imread(ROOT_IMG_DIR + 'pbn_raw.png')

print('Overall shape: ', img_rgb.shape)

print('The first item in the array is: ', img_rgb[0,0,:])

plt.imshow(img_rgb)

plt.gcf().set_size_inches((16,12))

Overall shape: (900, 723, 3) The first item in the array is: [ 0. 0.32549021 0.57647061]

img_lab = convert.rgb_to_lab(img_rgb)

alpha = .004

img_df = ops.unravel(img_lab)

img_df[['l', 'a', 'b']] = img_df[['l', 'a', 'b']] * alpha

Convert Raw Image¶

- Need to put into "LAB" space instead of RGB

- Also need to flatten into a data frame

img_df = ops.unravel(convert.rgb_to_lab(img_rgb)) * .004

img_df.head()

| x | y | l | a | b | |

|---|---|---|---|---|---|

| 0 | 0 | 0.000 | 0.138338 | 0.017247 | -0.164449 |

| 1 | 0 | 0.004 | 0.246500 | -0.027042 | -0.161878 |

| 2 | 0 | 0.008 | 0.181279 | -0.009071 | -0.160252 |

| 3 | 0 | 0.012 | 0.181279 | -0.009071 | -0.160252 |

| 4 | 0 | 0.016 | 0.161637 | -0.001464 | -0.158474 |

# Run Mixture Model

from sklearn.cluster import GMM

mm = GMM(n_components=500)

# This predicts the cluster number for each pixel

img_pred = mm.fit(img_df).predict(img_df)

Clusters Inferred¶

Give each "predicted" cluster a random color:

plt.imshow(load_image('pbn_clusters_rand.png'))

plt.gcf().set_size_inches((16,12))

Final Result¶

Getting the edges is a little tricky, but the clustering still does the bulk of the work

img_sol = load_image('pbn_solution.png')

img_out = load_image('pbn_outline.png')

fig = plt.figure()

fig.add_subplot(1, 3, 0)

plt.imshow(img_sol)

fig.add_subplot(1, 3, 2)

plt.imshow(img_out)

fig.add_subplot(1, 3, 1)

plt.imshow(img_rgb)

plt.gcf().set_size_inches((36,24))

Mixture Models in a Real World Setting¶

- Mixtures are often used to infer clusters of behaviors in data from anything:

- Motion Detection - Detecting types of movements from motion sensors

- Political Partisanship - Determining democrat vs republican using survey responses

- Topic Modeling - Determining which documents relate to which known topics

Old News .. who cares, there are better, domain-specific ways to do most of these things anyhow.

Having to know or guess at the number of clusters is a huge downer ..

Dirichlet Process¶

- Defines a "distribution of distributions"

- An infinite mixture model

- A bayesian clustering method (priors can be tuned)

- Important because:

- You don't have to specify the number of clusters

- It can be combined with other probability models in a very natural way (not true of other unbounded clustering algorithms)

Dirichlet Process Applications¶

- Topic Modeling - When you don't know how many topics there are in a corpus of text

- Motion Detection - Determining groups of movements like dancing, running, jumping from motion sensors without knowing they're coming

- MRI Activation Modeling - Determining clusters of brain activation during sensory tasks

- Speaker Diarisation - Figuring out who's speaking in a room with an unknown number of people

- Variable Selection - The "Dirichlet Lasso" for selecting groups of features (as opposed to group lasso)

Combining Hierarchichal Modeling, Infinite Mixtures, and Gaussian Processes:

from scipy.stats import multivariate_normal

n=100

nr=25

res = []

def randc(n):

return np.cov(np.vstack((np.random.normal(size=n), np.random.normal(size=n))).T)

for i in range(3):

c = randc(n)

if i == 0:

mu = 10 * np.sin(np.linspace(0, 2*np.pi, num=n))

elif i == 1:

mu = 20 * np.exp(-np.linspace(0, 3, num=n)) - 10

else:

mu = np.linspace(-10, 10, num=n)

m = multivariate_normal.rvs(mean=mu, cov=c, size=1)

for mi, mv in enumerate(m):

res.append((mi, mv, i, 0))

for j in range(nr):

y = multivariate_normal.rvs(mean=m, cov=randc(n), size=1)

for yi, yv in enumerate(y):

res.append((yi, yv, i, j+1))

res = pd.DataFrame(res, columns=['index', 'value', 'cluster', 'replicate'])

res['is_mean'] = np.where(res['replicate'] > 0, 'No', 'Yes')

res.head()

| index | value | cluster | replicate | is_mean | |

|---|---|---|---|---|---|

| 0 | 0 | 0.173774 | 0 | 0 | Yes |

| 1 | 1 | 0.486019 | 0 | 0 | Yes |

| 2 | 2 | 0.911663 | 0 | 0 | Yes |

| 3 | 3 | 1.146329 | 0 | 0 | Yes |

| 4 | 4 | 2.591406 | 0 | 0 | Yes |

%%R -i res -u px -h 400 -w 1200

res %>% mutate(

index = as.numeric(index),

value = as.numeric(value),

cluster = as.numeric(cluster),

replicate = as.numeric(replicate)

) %>% mutate(id = paste(cluster, replicate, sep='.')) %>%

ggplot(aes(x=index, y=value, color=factor(cluster), group=factor(id), alpha=is_mean)) +

geom_line() + theme_bw() + ggtitle('Clustered Hierarchical Gaussian Processes') +

theme(panel.grid.minor=element_blank(), panel.grid.major=element_blank())

Dirichlet process used on top of hierarchical gaussian process:

Done

source activate research3.3 ipython nbconvert /Users/eczech/repos/users_ericczech/Code/IPython3/meetup_pres.ipynb --to slides mv meetup_pres.slides.html ~/Sites/notebooks/