RTG Command Reference

This chapter describes RTG commands, giving a generic description of parameter options and usage together with the expected operation and output results of each command.

Command line interface (CLI)

RTG is installed as a single executable, which can be placed in any system directory where permissions allow the intended community of users to run the application. RTG commands are executed through the RTG command-line interface (CLI). Each command has its own set of parameters and options, described in this section.

Results are organized in results directories defined by command parameters and settings. The command line shell environment should include a set of familiar text post-processing tools, such as grep, awk, or perl. Otherwise, no additional applications such as databases or directory services are required.

RTG command syntax

Usage:

rtg COMMAND [OPTIONS] <REQUIRED>

To run an RTG command at the command prompt (either DOS window or Unix terminal), type the product name followed by the command and all required and optional parameters. For example:

$ rtg format -o human_REF_SDF human_REF.fasta

Typically, results are written to output files specified with the -o option. Commands that require an output directory or format to be specified have no default filename and do not add a filename extension.

Unfiltered output files are often very large, so by default the built-in compression option generates block-compressed output files with the .gz extension automatically, unless the -Z (--no-gzip) option is given with the command.
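Blocked gzip (BGZF) output is a series of concatenated gzip members, so standard gzip tools that handle multi-member files can generally read it. As a minimal sketch, the Python below streams lines from such a file with the standard gzip module. The helper name read_gz_lines and the example file are illustrative only, and the sketch ignores any block index, so it provides sequential reading, not random access.

```python
import gzip
import os
import tempfile
from typing import Iterator


def read_gz_lines(path: str) -> Iterator[str]:
    """Yield lines (without trailing newlines) from a gzip or BGZF file.

    Python's gzip module decompresses every concatenated gzip member in turn,
    which is the property that lets it read blocked gzip output.
    """
    with gzip.open(path, "rt") as handle:
        for line in handle:
            yield line.rstrip("\n")


if __name__ == "__main__":
    # Build a small two-member file to stand in for block-compressed output.
    path = os.path.join(tempfile.mkdtemp(), "example.fasta.gz")
    with open(path, "wb") as raw:
        raw.write(gzip.compress(b">seq1\nACGT\n"))
        raw.write(gzip.compress(b">seq2\nGGCC\n"))
    print(list(read_gz_lines(path)))  # ['>seq1', 'ACGT', '>seq2', 'GGCC']
```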

Many command parameters require user-supplied information of various types, as shown in the following:

| Type | Description |
|---|---|
| DIR, FILE | File or directory name(s) |
| SDF | Sequence data that has been formatted to SDF |
| INT | Integer value |
| FLOAT | Floating point decimal value |
| STRING | A sequence of characters for comments, filenames, or labels |
| REGION | A genomic region specification (see below) |

Genomic region parameters take one of the following forms:

- sequence_name (e.g. chr21) corresponds to the entirety of the named sequence.
- sequence_name:start (e.g. chr21:100000) corresponds to a single position on the named sequence.
- sequence_name:start-end (e.g. chr21:100000-110000) corresponds to a range that extends from the specified start position to the specified end position (inclusive). The positions are 1-based.
- sequence_name:position+length (e.g. chr21:100000+10000) corresponds to a range that starts at the specified position and includes the specified number of nucleotides.
- sequence_name:position~padding (e.g. chr21:100000~10000) corresponds to a range that spans the specified position by the specified amount of padding on either side.
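To make the inclusive, 1-based interpretation of each form concrete, the Python sketch below converts a region string into a (name, start, end) triple. It illustrates the rules listed above and is not RTG's own parser: the Region type and parse_region helper are hypothetical, clamping padded ranges at position 1 is an assumption, and sequence names that themselves contain a colon are not handled.

```python
import re
from typing import NamedTuple, Optional


class Region(NamedTuple):
    """A 1-based, inclusive region; start and end are None for a whole sequence."""
    name: str
    start: Optional[int]
    end: Optional[int]


# start, start-end, position+length or position~padding
_SUFFIX = re.compile(r"^(\d+)(?:([-+~])(\d+))?$")


def parse_region(spec: str) -> Region:
    name, colon, suffix = spec.rpartition(":")
    if not colon:
        return Region(spec, None, None)  # e.g. chr21: the entire sequence
    match = _SUFFIX.match(suffix)
    if match is None:
        raise ValueError(f"unrecognized region: {spec}")
    pos = int(match.group(1))
    op, value = match.group(2), match.group(3)
    if op is None:
        return Region(name, pos, pos)  # single position
    n = int(value)
    if op == "-":
        return Region(name, pos, n)  # explicit inclusive end
    if op == "+":
        return Region(name, pos, pos + n - 1)  # n nucleotides starting at pos
    # "~": pad on both sides; clamping at position 1 is an assumption
    return Region(name, max(1, pos - n), pos + n)


if __name__ == "__main__":
    for spec in ["chr21", "chr21:100000", "chr21:100000-110000",
                 "chr21:100000+10000", "chr21:100000~10000"]:
        print(spec, parse_region(spec))
```

Under these rules chr21:100000+10000 covers positions 100000 to 109999 (exactly 10000 nucleotides), while chr21:100000~10000 covers 90000 to 110000.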

To display all parameters and syntax associated with an RTG command, enter the command followed by --help. For example, all parameters available for the format command are displayed when rtg format --help is executed; its output is shown below.

Usage: rtg format [OPTION]... -o SDF FILE+

[OPTION]... -o SDF -I FILE

[OPTION]... -o SDF -l FILE -r FILE

Converts the contents of sequence data files (FASTA/FASTQ/SAM/BAM) into the RTG Sequence Data File (SDF) format.

File Input/Output

-f, --format=FORMAT format of input. Allowed values are [fasta, fastq, sam-se, sam-pe, cg-fastq, cg-sam] (Default is fasta)

-I, --input-list-file=FILE file containing a list of input read files (1 per line)

-l, --left=FILE left input file for FASTA/FASTQ paired end data

-o, --output=SDF name of output SDF

-p, --protein input is protein. If this option is not specified, then the input is assumed to consist of nucleotides

-q, --quality-format=FORMAT format of quality data for fastq files (use sanger for Illumina 1.8+). Allowed values are [sanger, solexa, illumina]

-r, --right=FILE right input file for FASTA/FASTQ paired end data

FILE+ input sequence files. May be specified 0 or more times

Filtering

--duster treat lower case residues as unknowns

--exclude=STRING exclude input sequences based on their name. If the input sequence contains the specified string then that sequence is excluded from the SDF. May be specified 0 or more times

--select-read-group=STRING when formatting from SAM/BAM input, only include reads with this read group ID

--trim-threshold=INT trim read ends to maximise base quality above the given threshold

Utility

--allow-duplicate-names disable checking for duplicate sequence names

-h, --help print help on command-line flag usage

--no-names do not include name data in the SDF output

--no-quality do not include quality data in the SDF output

--sam-rg=STRING|FILE file containing a single valid read group SAM header line or a string in the form "@RG\tID:READGROUP1\tSM:BACT_SAMPLE\tPL:ILLUMINA"

Required parameters are indicated in the usage display; optional parameters are listed immediately below the usage information in organized categories.

Use the double-dash when typing the full-word command option, as in --output:

$ rtg format --output human_REF_SDF human_REF.fasta

Commonly used command options also provide an abbreviated single-character version of the full option name, indicated with a single dash. Thus --output is equivalent to the abbreviated option -o:

$ rtg format -o human_REF_SDF human_REF.fasta

A set of utility commands is provided through the CLI: version, license, and help. Start with these commands to familiarize yourself with the software.

The rtg version command confirms that the RTG software launches correctly and reports information about the installation:

$ rtg version

It displays the installed RTG software version, the RAM available to RTG, license details, and citation information, for example:

$ rtg version

Product: RTG Core 3.5

Core Version: 6236f4e (2014-10-31)

RAM: 40.0GB of 47.0GB RAM can be used by rtg (84%)

License: No license file required

Contact: support@realtimegenomics.com

Patents / Patents pending:

US: 7,640,256, 13/129,329, 13/681,046, 13/681,215, 13/848,653, 13/925,704, 14/015,295, 13/971,654, 13/971,630, 14/564,810

UK: 1222923.3, 1222921.7, 1304502.6, 1311209.9, 1314888.7, 1314908.3

New Zealand: 626777, 626783, 615491, 614897, 614560

Australia: 2005255348, Singapore: 128254

Citation:

John G. Cleary, Ross Braithwaite, Kurt Gaastra, Brian S. Hilbush, Stuart Inglis, Sean A. Irvine, Alan Jackson, Richard Littin, Sahar Nohzadeh-Malakshah, Mehul Rathod, David Ware, Len Trigg, and Francisco M. De La Vega. "Joint Variant and De Novo Mutation Identification on Pedigrees from High-Throughput Sequencing Data." Journal of Computational Biology. June 2014, 21(6): 405-419. doi:10.1089/cmb.2014.0029.

(c) Real Time Genomics Inc, 2014

To see the release status of the commands you are licensed to use, type rtg license:

$ rtg license

License: No license file required

Command name Licensed? Release Level

Data formatting:

format Licensed GA

sdf2fasta Licensed GA

sdf2fastq Licensed GA

Utility:

bgzip Licensed GA

index Licensed GA

extract Licensed GA

sdfstats Licensed GA

sdfsubset Licensed GA

sdfsubseq Licensed GA

mendelian Licensed GA

vcfstats Licensed GA

vcfmerge Licensed GA

vcffilter Licensed GA

vcfannotate Licensed GA

vcfsubset Licensed GA

vcfeval Licensed GA

pedfilter Licensed GA

pedstats Licensed GA

rocplot Licensed GA

version Licensed GA

license Licensed GA

help Licensed GA

To display all commands and usage parameters available to use with your license, type rtg help:

$ rtg help

Usage: rtg COMMAND [OPTION]...

rtg RTG_MEM=16G COMMAND [OPTION]... (e.g. to set maximum memory use to 16 GB)

Type ``rtg help COMMAND`` for help on a specific command. The

following commands are available:

Data formatting:

format convert a FASTA file to SDF

cg2sdf convert Complete Genomics reads to SDF

sdf2fasta convert SDF to FASTA

sdf2fastq convert SDF to FASTQ

sdf2sam convert SDF to SAM/BAM

Read mapping:

map read mapping

mapf read mapping for filtering purposes

cgmap read mapping for Complete Genomics data

Protein search:

mapx translated protein search

Assembly:

assemble assemble reads into long sequences

addpacbio add Pacific Biosciences reads to an assembly

Variant detection:

calibrate create calibration data from SAM/BAM files

svprep prepare SAM/BAM files for sv analysis

sv find structural variants

discord detect structural variant breakends using discordant reads

coverage calculate depth of coverage from SAM/BAM files

snp call variants from SAM/BAM files

family call variants for a family following Mendelian inheritance

somatic call variants for a tumor/normal pair

population call variants for multiple potentially-related individuals

lineage call de novo variants in a cell lineage

avrbuild AVR model builder

avrpredict run AVR on a VCF file

cnv call CNVs from paired SAM/BAM files

Metagenomics:

species estimate species frequency in metagenomic samples

similarity calculate similarity matrix and nearest neighbor tree

Simulation:

genomesim generate simulated genome sequence

cgsim generate simulated reads from a sequence

readsim generate simulated reads from a sequence

readsimeval evaluate accuracy of mapping simulated reads

popsim generate a VCF containing simulated population variants

samplesim generate a VCF containing a genotype simulated from a population

childsim generate a VCF containing a genotype simulated as a child of two parents

denovosim generate a VCF containing a derived genotype containing de novo variants

samplereplay generate the genome corresponding to a sample genotype

cnvsim generate a mutated genome by adding CNVs to a template

Utility:

bgzip compress a file using block gzip

index create a tabix index

extract extract data from a tabix indexed file

sdfstats print statistics about an SDF

sdfsplit split an SDF into multiple parts

sdfsubset extract a subset of an SDF into a new SDF

sdfsubseq extract a subsequence from an SDF as text

sam2bam convert SAM file to BAM file and create index

sammerge merge sorted SAM/BAM files

samstats print statistics about a SAM/BAM file

samrename rename read id to read name in SAM/BAM files

mapxrename rename read id to read name in mapx output files

mendelian check a multi-sample VCF for Mendelian consistency

vcfstats print statistics about variants contained within a VCF file

vcfmerge merge single-sample VCF files into a single multi-sample VCF

vcffilter filter records within a VCF file

vcfannotate annotate variants within a VCF file

vcfsubset create a VCF file containing a subset of the original columns

vcfeval evaluate called variants for agreement with a baseline variant set

pedfilter filter and convert a pedigree file

pedstats print information about a pedigree file

avrstats print statistics about an AVR model

rocplot plot ROC curves from vcfeval ROC data files

usageserver run a local server for collecting RTG command usage information

version print version and license information

license print license information for all commands

help print this screen or help for specified command

The help command will only list the commands at GA or beta release level.

To display help and syntax information for a specific command from the command line, type the command followed by the --help option, as in:

$ rtg format --help

Note

The following commands are synonymous:

rtg help format and rtg format --help

See also

Refer to Installation and deployment for information about installing the RTG product executable.

Data Formatting Commands

format

Synopsis:

The format command converts the contents of sequence data files (FASTA/FASTQ/SAM/BAM) into the RTG Sequence Data File (SDF) format. This step ensures efficient processing of very large data sets by organizing the data into multiple binary files within a named directory. The same SDF format is used for storing sequence data, whether it be genomic reference, sequencing reads, protein sequences, etc.

Syntax:

Format one or more files specified from command line into a single SDF:

$ rtg format [OPTION] -o SDF FILE+

Format one or more files specified in a text file into a single SDF:

$ rtg format [OPTION] -o SDF -I FILE

Format mate pair reads into a single SDF:

$ rtg format [OPTION] -o SDF -l FILE -r FILE

Examples:

For FASTA (.fa) genome reference data:

$ rtg format -o maize_reference maize_chr*.fa

For FASTQ (.fq) sequence read data:

$ rtg format -f fastq -q sanger -o h1_reads -l h1_sample_left.fq -r h1_sample_right.fq

Parameters:

| File Input/Output | |
|---|---|
| -f, --format=FORMAT | The format of the input file(s). Allowed values are [fasta, fastq, fastq-interleaved, sam-se, sam-pe] (Default is fasta). |
| -I, --input-list-file=FILE | Specifies a file containing a list of sequence data files (one per line) to be converted into an SDF. |
| -l, --left=FILE | The left input file for FASTA/FASTQ paired end data. |
| -o, --output=SDF | The name of the output SDF. |
| -p, --protein | Set if the input consists of protein. If this option is not specified, then the input is assumed to consist of nucleotides. |
| -q, --quality-format=FORMAT | The format of the quality data for fastq format files. (Use sanger for Illumina 1.8+). Allowed values are [sanger, solexa, illumina]. |
| -r, --right=FILE | The right input file for FASTA/FASTQ paired end data. |
| FILE+ | Specifies a sequence data file to be converted into an SDF. May be specified 0 or more times. |

| Filtering | |
|---|---|
| --duster | Treat lower case residues as unknowns. |
| --exclude=STRING | Exclude individual input sequences based on their name. If the input sequence name contains the specified string then that sequence is excluded from the SDF. May be specified 0 or more times. |
| --select-read-group=STRING | Only include reads with this read group ID when formatting from SAM/BAM files. |
| --trim-threshold=INT | Set to trim the read ends to maximise the base quality above the given threshold. |

| Utility | |
|---|---|
| --allow-duplicate-names | Set to disable duplicate name detection. |
| -h, --help | Prints help on command-line flag usage. |
| --no-names | Do not include sequence names in the resulting SDF. |
| --no-quality | Do not include sequence quality data in the resulting SDF. |
| --sam-rg=STRING\|FILE | Specifies a file containing a single valid read group SAM header line or a string in the form "@RG\tID:READGROUP1\tSM:BACT_SAMPLE\tPL:ILLUMINA". |

Usage:

Formatting takes one or more input data files and creates a single SDF. Specify the type of file to be converted, or accept the default of FASTA format. To aggregate multiple input data files, such as when formatting a reference genome consisting of multiple chromosomes, list all files on the command line or use the --input-list-file flag to specify a file containing the list of files to process.

Compressed FASTA and FASTQ input files must have a filename extension of .gz (for gzip compressed data) or .bz2 (for bzip2 compressed data).
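Because the compression type is inferred from the extension, a compressed file without the right suffix will be misread. The Python sketch below applies the same extension rule when you inspect input files yourself, for example to check their contents before formatting; open_sequence_file is a hypothetical helper, not part of RTG.

```python
import bz2
import gzip
import os
import tempfile
from typing import IO


def open_sequence_file(path: str) -> IO[str]:
    """Open a FASTA/FASTQ file as text, choosing decompression from its extension."""
    if path.endswith(".gz"):
        return gzip.open(path, "rt")  # gzip compressed data
    if path.endswith(".bz2"):
        return bz2.open(path, "rt")  # bzip2 compressed data
    return open(path, "rt")  # anything else is read as uncompressed text


if __name__ == "__main__":
    folder = tempfile.mkdtemp()
    # Write the same record uncompressed, gzip compressed and bzip2 compressed.
    for name, opener in [("ref.fa", open), ("ref.fa.gz", gzip.open), ("ref.fa.bz2", bz2.open)]:
        path = os.path.join(folder, name)
        with opener(path, "wt") as out:
            out.write(">chr1\nACGTACGT\n")
        with open_sequence_file(path) as handle:
            print(name, handle.readline().strip())
```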

When formatting human reference genome data, it is recommended that the resulting SDF be augmented with chromosome reference metadata, in order to enable automatic sex-aware features during mapping and variant calling. The format command will automatically recognize several common human reference genomes and install a reference configuration file. If your reference genome is not recognized, a configuration can be manually adapted from one of the examples provided in the RTG distribution and installed in the SDF directory. The reference configuration is described in RTG reference file format.

When using FASTQ input files you must specify the quality format being used as one of sanger, solexa or illumina. From Illumina pipeline version 1.8 onwards, quality values are encoded in Sanger format and so should be formatted using --quality-format=sanger. Output from earlier Illumina pipeline versions should be formatted using --quality-format=illumina for versions from 1.3 up to (but not including) 1.8, or --quality-format=solexa for versions earlier than 1.3.
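The three encodings differ in their ASCII offset and, for Solexa, in the score scale. Under the standard definitions (Sanger is Phred+33, Illumina 1.3 to 1.7 is Phred+64, and Solexa is an odds-based score with offset 64), decoding a quality string to Phred values can be sketched in Python as follows. This is for illustration only: decode_qualities is a hypothetical helper, and rounding converted Solexa scores to the nearest integer is an assumption that may not match RTG.

```python
import math
from typing import List


def decode_qualities(qual: str, encoding: str) -> List[int]:
    """Convert a FASTQ quality string into Phred quality scores."""
    if encoding == "sanger":  # Phred+33, also used by Illumina 1.8+
        return [ord(c) - 33 for c in qual]
    if encoding == "illumina":  # Phred+64, Illumina 1.3 up to 1.8
        return [ord(c) - 64 for c in qual]
    if encoding == "solexa":  # Solexa+64 odds-based scores, before Illumina 1.3
        return [round(10 * math.log10(10 ** ((ord(c) - 64) / 10) + 1)) for c in qual]
    raise ValueError(f"unknown quality encoding: {encoding}")


if __name__ == "__main__":
    print(decode_qualities("!5I", "sanger"))    # [0, 20, 40]
    print(decode_qualities("@Th", "illumina"))  # [0, 20, 40]
```

Reading Illumina 1.3 to 1.7 data as sanger would inflate every score by 31, which is why the correct --quality-format matters.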

For FASTQ files that represent paired-end read data, indicate each side respectively using the --left=FILE and --right=FILE flags.

Sometimes paired-end reads are represented in a single FASTQ file by interleaving each side of the read. This type of input can be formatted by specifying fastq-interleaved as the format type.
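In an interleaved file the records alternate left, right, left, right. The Python sketch below, which assumes plain four-line FASTQ records (no multi-line sequences) and uses hypothetical helper names, splits such a stream back into its two sides. It only illustrates the layout; rtg format accepts fastq-interleaved input directly.

```python
from typing import Iterable, Iterator, List, Tuple

# A FASTQ record as (header, sequence, separator, qualities).
FastqRecord = Tuple[str, ...]


def fastq_records(lines: Iterable[str]) -> Iterator[FastqRecord]:
    """Group FASTQ lines into four-line records."""
    it = iter(lines)
    while True:
        # zip stops after four lines without consuming a fifth.
        record = tuple(line.rstrip("\n") for _, line in zip(range(4), it))
        if not record:
            return
        if len(record) != 4:
            raise ValueError("truncated FASTQ record")
        yield record


def deinterleave(lines: Iterable[str]) -> Tuple[List[FastqRecord], List[FastqRecord]]:
    """Split interleaved paired-end FASTQ into left (even) and right (odd) records."""
    left: List[FastqRecord] = []
    right: List[FastqRecord] = []
    for index, record in enumerate(fastq_records(lines)):
        (left if index % 2 == 0 else right).append(record)
    if len(left) != len(right):
        raise ValueError("odd number of records: input is not fully paired")
    return left, right
```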

The mapx command maps translated DNA sequence data against a protein reference. You must use the -p, --protein flag to format the protein reference used by mapx.

Use the sam-se format for single end SAM/BAM input files and the sam-pe format for paired end SAM/BAM input files. Note that if the input SAM/BAM files are sorted in coordinate order (for example if they have already been aligned to a reference), it is recommended that they be shuffled before formatting, so that subsequent mapping is not biased by processing reads in chromosome order. For example, a BAM file can be shuffled using samtools collate as follows:

$ samtools collate -uOn 256 reads.bam tmp-prefix >reads_shuffled.bam

This can also be carried out on the fly during formatting, using bash process substitution to reduce intermediate I/O, for example:

$ rtg format --format sam-pe <(samtools collate -uOn 256 reads.bam temp-prefix) ...

The SDF for a read set can contain a SAM read group, which will be automatically picked up from the input SAM/BAM files if they contain only one read group. If the input SAM/BAM files contain multiple read groups, you must select a single read group from the SAM/BAM file to format using the --select-read-group flag, or specify a custom read group with the --sam-rg flag. The --sam-rg flag can also be used to add read group information to reads given in other input formats. The SAM read group stored in an SDF will be automatically used when mapping the reads it contains, to provide tracking information in the output BAM files.

The --trim-threshold flag can be used to trim poor quality read ends

from the input reads by inspecting base qualities from FASTQ input. If

and only if the quality of the final base of the read is less than the

threshold given, a new read length is found which maximizes the overall

quality of the retained bases using the following formula.

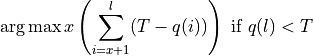

Where l is the original read length, x is the new read length, T is the given threshold quality and q(n) is the quality of the base at the position n of the read.
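The formula itself appears only in the figure. The definitions above match the BWA-style trimming criterion, in which x is chosen to maximize the sum of (T - q(n)) over the removed bases n = x+1 to l; the Python sketch below assumes that form. It is an illustration rather than RTG's code, the helper name quality_trim_length is hypothetical, and ties are resolved in favour of the longer read (also an assumption).

```python
from typing import Sequence


def quality_trim_length(quals: Sequence[int], threshold: int) -> int:
    """Return the new read length x for BWA-style end trimming.

    quals[i] holds q(i + 1), the Phred quality of the base at 1-based position i + 1.
    """
    length = len(quals)
    # Only trim when the final base is below the threshold.
    if length == 0 or quals[-1] >= threshold:
        return length
    best_x, best_score, score = length, 0, 0
    # Walk back from the 3' end; score is the sum of (T - q(n)) for n = x+1 .. l.
    for x in range(length - 1, -1, -1):
        score += threshold - quals[x]
        if score > best_score:
            best_x, best_score = x, score
    return best_x


if __name__ == "__main__":
    print(quality_trim_length([30, 30, 30, 10, 5], 20))  # 3
```

For example, with T = 20 and qualities 30, 30, 30, 10, 5 the final base is below the threshold, and removing the last two bases gives the largest sum (10 + 15 = 25), so the read is trimmed to length 3.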

Note

Sequencing system read files and reference genome files often have the same extension and it may not always be obvious which file is a read set and which is a genome. Before formatting a sequencing system file, open it to see which type of file it is. For example:

$ less pf3.fa

In general, a read file typically begins with an @ or + character; a genome reference file typically begins with the characters chr.

Normally, when the input data contains multiple sequences with the same name, the format command fails with an error. The --allow-duplicate-names flag disables this check, which conserves memory, but if the input data has multiple sequences with the same name you will not be warned. Duplicate sequence names can cause problems with other commands, especially for reference data, since the output of many commands identifies sequences by their names.
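If you intend to use --allow-duplicate-names to save memory, you can first confirm that the input really has unique names. The Python sketch below is a hypothetical pre-check, not part of RTG. It assumes the sequence name is the first whitespace-delimited token of each FASTA header line; whether RTG derives names exactly this way is an assumption.

```python
from collections import Counter
from typing import Iterable, List


def duplicate_fasta_names(lines: Iterable[str]) -> List[str]:
    """Return sequence names that occur more than once in FASTA header lines."""
    counts = Counter(
        line[1:].split()[0]  # name = first token after '>'
        for line in lines
        if line.startswith(">") and line[1:].split()
    )
    return sorted(name for name, n in counts.items() if n > 1)


if __name__ == "__main__":
    fasta = [">chr1 assembled\n", "ACGT\n", ">chr2\n", "GG\n", ">chr1\n", "TT\n"]
    print(duplicate_fasta_names(fasta))  # ['chr1']
```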

sdf2fasta

Synopsis:

Convert SDF data into a FASTA file.

Syntax:

$ rtg sdf2fasta [OPTION]... -i SDF -o FILE

Example:

$ rtg sdf2fasta -i humanSDF -o humanFASTA_return

Parameters:

| File Input/Output | |
|---|---|
| -i SDF | SDF containing sequences. |
| -o FILE | Output filename (extension added if not present). Use ‘-’ to write to standard output. |

| Filtering | |
|---|---|
| --end-id=INT | Only output sequences with sequence id less than the given number. (Sequence ids start at 0). |
| --start-id=INT | Only output sequences with sequence id greater than or equal to the given number. (Sequence ids start at 0). |
| | Name of a file containing a list of sequences to extract, one per line. |
| --names | Interpret any specified sequence as names instead of numeric sequence ids. |
| --taxons | Interpret any specified sequence as taxon ids instead of numeric sequence ids. This option only applies to a metagenomic reference species SDF. |
| | Specify one or more explicit sequences to extract, as sequence id, or sequence name if the --names flag is set. |

| Utility | |
|---|---|
| -h, --help | Prints help on command-line flag usage. |
| | Interleave paired data into a single output file. Default is to split to separate output files. |
| -l, --line-length=INT | Set the maximum number of nucleotides or amino acids to print on a line of FASTA output. Should be nonnegative, with a value of 0 indicating that the line length is not capped. (Default is 0). |
| -Z, --no-gzip | Set this flag to create the FASTA output file without compression. By default the output file is compressed with blocked gzip. |

Usage:

Use the sdf2fasta command to convert SDF data into FASTA format. By default, sdf2fasta writes each sequence on a single line of FASTA output, so these lines are as long as the sequences themselves. To make them more readable, use the -l, --line-length flag and define a reasonable line length, such as 75.
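The effect of -l, --line-length can be pictured with the short Python sketch below, which splits one sequence into output lines and treats 0 as uncapped, as described in the parameter table. wrap_sequence is a hypothetical helper for illustration, not RTG code.

```python
from typing import List


def wrap_sequence(seq: str, line_length: int = 0) -> List[str]:
    """Split a sequence into FASTA lines; 0 means the line length is not capped."""
    if line_length < 0:
        raise ValueError("line length must be nonnegative")
    if line_length == 0 or not seq:
        return [seq]
    return [seq[i:i + line_length] for i in range(0, len(seq), line_length)]


if __name__ == "__main__":
    print(wrap_sequence("ACGTACGTAC", 4))  # ['ACGT', 'ACGT', 'AC']
    print(wrap_sequence("ACGTACGTAC"))     # ['ACGTACGTAC']
```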

By default all sequences will be extracted, but flags may be specified to extract reads within a range, or explicitly specified reads (either by numeric sequence id or by sequence name if --names is set).

Additionally, when the input SDF is a metagenomic species reference SDF and the --taxons option is set, any supplied id is interpreted as a taxon id and all sequences assigned directly to that taxon id will be output. This provides a convenient way to extract all sequence data corresponding to one or more species from a metagenomic species reference SDF.

Sequence ids are numbered starting at 0; the --start-id flag is an inclusive lower bound on id and the --end-id flag is an exclusive upper bound. For example, if you have an SDF with five sequences (ids: 0, 1, 2, 3, 4), the following command:

$ rtg sdf2fasta --start-id=3 -i mySDF -o output

will extract sequences with id 3 and 4. The command:

$ rtg sdf2fasta --end-id=3 -i mySDF -o output

will extract sequences with id 0, 1, and 2. And the command:

$ rtg sdf2fasta --start-id=2 --end-id=4 -i mySDF -o output

will extract sequences with id 2 and 3.
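The --start-id and --end-id pair behaves like a half-open interval, the same convention as Python's range. The sketch below reproduces the three examples above; selected_ids is a hypothetical helper, and clamping --end-id to the number of sequences is an assumption.

```python
from typing import List, Optional


def selected_ids(num_sequences: int, start_id: Optional[int] = None,
                 end_id: Optional[int] = None) -> List[int]:
    """Return the sequence ids kept by --start-id (inclusive) and --end-id (exclusive)."""
    start = 0 if start_id is None else start_id
    end = num_sequences if end_id is None else min(end_id, num_sequences)
    return list(range(start, end))


if __name__ == "__main__":
    print(selected_ids(5, start_id=3))            # [3, 4]
    print(selected_ids(5, end_id=3))              # [0, 1, 2]
    print(selected_ids(5, start_id=2, end_id=4))  # [2, 3]
```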

sdf2fastq

Synopsis:

Convert SDF data into a FASTQ file.

Syntax:

$ rtg sdf2fastq [OPTION]... -i SDF -o FILE

Example:

$ rtg sdf2fastq -i humanSDF -o humanFASTQ_return

Parameters:

| File Input/Output | |
|---|---|
| -i SDF | Specifies the SDF data to be converted. |
| -o FILE | Specifies the file name used to write the resulting FASTQ output. |

| Filtering | |
|---|---|
| --end-id=INT | Only output sequences with sequence id less than the given number. (Sequence ids start at 0). |
| --start-id=INT | Only output sequences with sequence id greater than or equal to the given number. (Sequence ids start at 0). |
| | Name of a file containing a list of sequences to extract, one per line. |
| --names | Interpret any specified sequence as names instead of numeric sequence ids. |
| | Specify one or more explicit sequences to extract, as sequence id, or sequence name if the --names flag is set. |

| Utility | |
|---|---|
| -h, --help | Prints help on command-line flag usage. |
| -q, --default-quality=INT | Set the default quality to use if the SDF does not contain sequence quality data (0-63). |
| | Interleave paired data into a single output file. Default is to split to separate output files. |
| -l, --line-length=INT | Set the maximum number of nucleotides or amino acids to print on a line of FASTQ output. Should be nonnegative, with a value of 0 indicating that the line length is not capped. (Default is 0). |
| -Z, --no-gzip | Set this flag to create the FASTQ output file without compression. By default the output file is compressed with blocked gzip. |

Usage:

Use the sdf2fastq command to convert SDF data into FASTQ format. If no quality data is available in the SDF, use the -q, --default-quality flag to set a quality score for the FASTQ output. The output uses Sanger quality encoding. By default, sdf2fastq writes each sequence on a single line of FASTQ output. As with sdf2fasta, the -l, --line-length flag can be used to restrict the line lengths to improve readability of long sequences.

By default all sequences will be extracted, but flags may be specified to extract reads within a range, or explicitly specified reads (either by numeric sequence id or by sequence name if --names is set).

It may be preferable to extract data to unaligned SAM/BAM format using sdf2sam, as this preserves read-group information stored in the SDF and may also be more convenient when dealing with paired-end data.

The --start-id and --end-id flags behave as in sdf2fasta.

sdf2sam

Synopsis:

Convert SDF read data into an unaligned SAM or BAM format file.

Syntax:

$ rtg sdf2sam [OPTION]... -i SDF -o FILE

Example:

$ rtg sdf2sam -i samplereadsSDF -o samplereads.bam

Parameters:

| File Input/Output | |
|---|---|
| -i SDF | Specifies the SDF data to be converted. |
| -o FILE | Specifies the file name used to write the resulting SAM/BAM to. The output format is automatically determined based on the filename specified. If ‘-’ is given, the data is written as uncompressed SAM to standard output. |

| Filtering | |
|---|---|
| --end-id=INT | Only output sequences with sequence id less than the given number. (Sequence ids start at 0). |
| --start-id=INT | Only output sequences with sequence id greater than or equal to the given number. (Sequence ids start at 0). |
| | Name of a file containing a list of sequences to extract, one per line. |
| --names | Interpret any specified sequence as names instead of numeric sequence ids. |
| | Specify one or more explicit sequences to extract, as sequence id, or sequence name if the --names flag is set. |

| Utility | |
|---|---|
| -h, --help | Prints help on command-line flag usage. |
| -Z, --no-gzip | Set this flag when creating SAM format output to disable compression. By default SAM is compressed with blocked gzip, and BAM is always compressed. |

Usage:

Use the sdf2sam command to convert SDF data into unaligned SAM/BAM format. By default all sequences will be extracted, but flags may be specified to extract reads within a range, or explicitly specified reads (either by numeric sequence id or by sequence name if --names is set). This command is a useful way to export paired-end data to a single output file while retaining any read group information that may be stored in the SDF.

The output format is either SAM or BAM, depending on the specified output file name. For example, output.sam or output.sam.gz will be written as SAM, whereas output.bam will be written as BAM. If neither SAM nor BAM format is indicated by the file name, then BAM will be used and the output file name adjusted accordingly; for example, output will become output.bam. However, if standard output is selected (-), then the output will always be in uncompressed SAM format.
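The naming rules can be summarized as a small decision function. This Python sketch (sdf2sam_output is a hypothetical helper) returns the output format and file name implied by the rules above; it does not model whether a .gz suffix is added to SAM output when compression is enabled.

```python
from typing import Tuple


def sdf2sam_output(name: str) -> Tuple[str, str]:
    """Return (format, filename) following the sdf2sam naming rules."""
    if name == "-":
        return "sam", "-"  # standard output: always uncompressed SAM
    if name.endswith(".sam") or name.endswith(".sam.gz"):
        return "sam", name
    if name.endswith(".bam"):
        return "bam", name
    return "bam", name + ".bam"  # format not indicated: default to BAM


if __name__ == "__main__":
    for requested in ["-", "output.sam", "output.sam.gz", "output.bam", "output"]:
        print(requested, sdf2sam_output(requested))
```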

The --start-id and --end-id flags behave as in sdf2fasta.

fastqtrim

Synopsis:

Trim reads in FASTQ files.

Syntax:

$ rtg fastqtrim [OPTION]... -i FILE -o FILE

Example:

Apply hard base removal from the start of the read and quality-based trimming of terminal bases:

$ rtg fastqtrim -s 12 -E 18 -i S12_R1.fastq.gz -o S12_trimmed_R1.fastq.gz

Parameters:

| File Input/Output | |
|---|---|
| -i FILE | Input FASTQ file. Use ‘-’ to read from standard input. |
| -o FILE | Output filename. Use ‘-’ to write to standard output. |
| | Quality data encoding method used in FASTQ input files (Illumina 1.8+ uses sanger). Allowed values are [sanger, solexa, illumina] (Default is sanger). |

| Filtering | |
|---|---|
| --discard-empty-reads | Discard reads that have zero length after trimming. Should not be used with paired-end data. |
| -E, --end-quality-threshold | Trim read ends to maximise base quality above the given threshold (Default is 0). |
| | If a read ends up shorter than this threshold it will be trimmed to zero length (Default is 0). |
| --start-quality-threshold | Trim read starts to maximise base quality above the given threshold (Default is 0). |
| | Always trim the specified number of bases from read end (Default is 0). |
| -s | Always trim the specified number of bases from read start (Default is 0). |

| Utility | |
|---|---|
| -h, --help | Print help on command-line flag usage. |
| -Z, --no-gzip | Do not gzip the output. |
| | If set, output in reverse complement. |
| --seed | Seed used during subsampling. |
| --subsample | If set, subsample the input to retain this fraction of reads. |
| | Number of threads (Default is the number of available cores). |

Usage:

Use fastqtrim to apply custom trimming and preprocessing to raw FASTQ files prior to mapping and alignment. The format command contains some limited trimming options, which are applied to all input files. However, in some cases different or specific trimming operations need to be applied to the various input files. For example, for paired-end data, different trimming may need to be applied to the left read files than to the right read files. In these cases, fastqtrim should be used to process the FASTQ files first.

The --end-quality-threshold flag can be used to trim poor quality bases

from the ends of the input reads by inspecting base qualities from FASTQ

input. If and only if the quality of the final base of the read is less

than the threshold given, a new read length is found which maximizes the

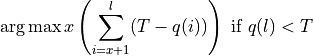

overall quality of the retained bases using the following formula:

where l is the original read length, x is the new read length, T

is the given threshold quality and q(n) is the quality of the base at

the position n of the read. Similarly, --start-quality-threshold

can be used to apply this quality-based thresholding to the start of

reads.
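Applying the rule to the start of a read amounts to running the end-trimming computation on the reversed quality values. The Python sketch below assumes the BWA-style criterion matching the definitions above (choose x to maximize the sum of T - q(n) over the removed bases) and assumes start trimming is applied before end trimming. trim_read and _trim_amount are hypothetical helpers, and hard trimming and minimum read length handling are not modeled.

```python
from typing import List, Sequence, Tuple


def _trim_amount(quals: Sequence[int], threshold: int) -> int:
    """Number of bases to remove from the end of quals (BWA-style criterion)."""
    if not quals or quals[-1] >= threshold:
        return 0
    best_x, best_score, score = len(quals), 0, 0
    for x in range(len(quals) - 1, -1, -1):
        score += threshold - quals[x]  # running sum of (T - q(n)) for n = x+1 .. l
        if score > best_score:
            best_x, best_score = x, score
    return len(quals) - best_x


def trim_read(seq: str, quals: List[int], start_threshold: int = 0,
              end_threshold: int = 0) -> Tuple[str, List[int]]:
    """Apply start- then end-quality trimming, returning the retained bases."""
    # Start trimming reuses the end-trimming rule on the reversed qualities.
    cut_start = _trim_amount(quals[::-1], start_threshold)
    seq, quals = seq[cut_start:], quals[cut_start:]
    cut_end = _trim_amount(quals, end_threshold)
    return seq[:len(seq) - cut_end], quals[:len(quals) - cut_end]


if __name__ == "__main__":
    print(trim_read("AACGTT", [5, 30, 30, 30, 30, 8], 20, 20))  # ('ACGT', [30, 30, 30, 30])
```

With the default thresholds of 0 nothing is trimmed, because no Phred quality is below 0.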

Some of the trimming options may result in reads that have no bases remaining. By default, these are output as zero-length FASTQ reads, which RTG commands are able to handle normally. It is also possible to remove zero-length reads altogether from the output with the --discard-empty-reads option; however, this should not be used when processing FASTQ files corresponding to paired-end data, otherwise the pairs in the two files will no longer be matched.

Similarly, when using the --subsample option to down-sample a FASTQ file for paired-end data, you should specify an explicit randomization seed via --seed and use the same seed value for the left and right files.
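A shared seed keeps pairs together because a seeded generator makes the same sequence of keep/drop decisions for both files, provided the reads appear in the same order. The Python sketch below illustrates this with random.Random. subsample is a hypothetical helper, and it is an assumption that RTG's --subsample makes one random draw per read in file order.

```python
import random
from typing import Iterable, List


def subsample(read_names: Iterable[str], fraction: float, seed: int) -> List[str]:
    """Keep each read with probability `fraction`, using a seeded generator.

    One draw is made per read, so the decisions depend only on the seed and
    the read's position in the file.
    """
    rng = random.Random(seed)
    return [name for name in read_names if rng.random() < fraction]


if __name__ == "__main__":
    left = [f"read{i}/1" for i in range(10)]
    right = [f"read{i}/2" for i in range(10)]
    # Same seed for both sides: the same read indices survive in each file.
    print(subsample(left, 0.5, seed=7))
    print(subsample(right, 0.5, seed=7))
```

Using different seeds for the two files would keep different reads on each side and break the pairing.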

Formatting with filtering on the fly

Running custom filtering with fastqtrim need not mean that additional disk space is required or that formatting is slowed down by additional disk I/O. When using standard Unix shells, it is possible to perform the filtering on the fly. The following example demonstrates how to apply different trimming options to left and right files while formatting to SDF:

$ rtg format -f fastq -q sanger -o S12_trimmed.sdf \
  -l <(rtg fastqtrim -s 12 -E 18 -i S12_R1.fastq.gz -o -) \
  -r <(rtg fastqtrim -E 18 -i S12_R2.fastq.gz -o -)


petrim

Synopsis:

Trim paired-end read FASTQ files based on read arm alignment overlap.

Syntax:

$ rtg petrim [OPTION]... -l FILE -o FILE -r FILE

Parameters:

| File Input/Output | |
|---|---|
| -l FILE | Left input FASTQ file (AKA R1). |
| -o FILE | Output filename prefix. Use ‘-’ to write to standard output. |
| | Quality data encoding method used in FASTQ input files (Illumina 1.8+ uses sanger). Allowed values are [sanger, solexa, illumina] (Default is sanger). |
| -r FILE | Right input FASTQ file (AKA R2). |

| Sensitivity Tuning | |
|---|---|
| | Aligner indel band width scaling factor, fraction of read length allowed as an indel (Default is 0.5). |
| | Penalty for a gap extension during alignment (Default is 1). |
| | Penalty for a gap open during alignment (Default is 19). |
| | Minimum percent identity in overlap to trigger overlap trimming (Default is 90). |
| | Minimum number of bases in overlap to trigger overlap trimming (Default is 25). |
| | Penalty for a mismatch during alignment (Default is 9). |
| | Soft clip alignments if indels occur INT bp from either end (Default is 5). |
| | Penalty for unknown nucleotides during alignment (Default is 5). |

| Filtering | |
|---|---|
| | If set, discard pairs where both reads have zero length (after any trimming). |
| | If set, discard pairs where either read has zero length (after any trimming). |
| | Assume R1 starts with probes this long, and trim R2 bases that overlap into this (Default is 0). |
| | If set, merge overlapping reads at midpoint of overlap region. Result is in R1 (R2 will be empty). |
| | If set, trim overlapping reads to midpoint of overlap region. |
| | If a read ends up shorter than this threshold it will be trimmed to zero length (Default is 0). |
| | Method used to alter bases/qualities at mismatches within overlap region. Allowed values are [none, zero-phred, pick-best] (Default is none). |
| | Assume R2 starts with probes this long, and trim R1 bases that overlap into this (Default is 0). |

| Utility | |
|---|---|
| -h, --help | Print help on command-line flag usage. |
| | Interleave paired data into a single output file. Default is to split to separate output files. |
| -Z, --no-gzip | Do not gzip the output. |
| | Seed used during subsampling. |
| | If set, subsample the input to retain this fraction of reads. |
| | Number of threads (Default is the number of available cores). |

Usage:

Paired-end read sequencing with read lengths that are long relative to the typical library fragment size can often result in the same bases being sequenced by both arms. This repeated sequencing of bases within the same fragment can skew variant calling, and so it can be advantageous to remove such read overlap.

In some cases, complete read-through can occur, resulting in additional adaptor or non-genomic bases being present at the ends of reads.

In addition, some library preparation methods rely on the ligation of synthetic probe sequence to attract target DNA, which is subsequently sequenced. Since these probe bases do not represent genomic material, they must be removed at some point in the analysis pipeline prior to variant calling; otherwise they could act as a reference bias when calling variants. Removal from the primary arm where the probe is attached is typically easy enough (e.g. via fastqtrim); however, in cases of high read overlap, probe sequence can also be present in the other read arm.

petrim aligns each read arm against its mate with high stringency in order to identify cases of read overlap. The sensitivity of read overlap detection is primarily controlled through the use of --min-identity and --min-overlap-length, although it is also possible to adjust the penalties used during alignment.
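The following Python sketch illustrates the kind of test that --min-overlap-length and --min-identity control. It is a deliberate simplification: petrim performs a gapped alignment using the penalties listed above, whereas this sketch only tries ungapped placements of the reverse-complemented R2 starting within R1 (so it does not model read-through, where R2 extends past the start of R1). The function names are hypothetical.

```python
import random

COMPLEMENT = str.maketrans("ACGTN", "TGCAN")

def reverse_complement(seq):
    return seq.translate(COMPLEMENT)[::-1]

def find_overlap(r1, r2, min_overlap=25, min_identity=90):
    """Return (offset, length) of the longest ungapped overlap between R1 and
    the reverse complement of R2 that passes both thresholds, or None.

    `offset` is the 0-based position in R1 where reverse-complemented R2 begins.
    """
    rc2 = reverse_complement(r2)
    for offset in range(len(r1)):
        length = min(len(r1) - offset, len(rc2))
        if length < min_overlap:
            break  # later offsets only give shorter overlaps
        window = r1[offset:offset + length]
        matches = sum(a == b for a, b in zip(window, rc2[:length]))
        if 100 * matches >= min_identity * length:  # percent identity check
            return offset, length
    return None

# A 50 bp fragment sequenced with 40 bp reads from both ends.
rng = random.Random(1)
fragment = "".join(rng.choice("ACGT") for _ in range(50))
r1 = fragment[:40]                       # R1 reads the fragment forwards
r2 = reverse_complement(fragment[-40:])  # R2 reads the opposite strand
overlap = find_overlap(r1, r2)           # true overlap: fragment bases 10-39
```

Raising --min-overlap-length above the true overlap length (30 bp here) would cause the pair to be left untrimmed, which is the trade-off those two options tune.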

The following types of trimming or merging may be applied:

- Removal of non-genomic bases due to complete read-through. This removal is always applied.
- Removal of overlap bases impinging into regions occupied by probe bases. For example, if the left arms contain 11-mer probes, using --left-probe-length=11 will result in the removal of any right arm bases that overlap into the first 11 bases of the left arm. Similar trimming is available for situations where probes are ligated to the right arm by using --right-probe-length.
- Adjustment of mismatching read bases inside areas of overlap. Such mismatches indicate that one or other of the bases has been incorrectly sequenced. Alteration of these bases is selected by supplying the --mismatch-adjustment flag with a value of zero-phred to alter the phred quality score of both bases to zero, or pick-best to choose whichever base had the higher reported quality score.
- Removal of overlap regions by trimming both arms back to a point where no overlap is present. An equal number of bases are removed from each arm. This trimming is enabled by specifying --midpoint-trim and takes place after any read-through or probe related trimming.
- Merging non-redundant sequence from both reads to create a single read, enabled via --midpoint-merge. This is like --midpoint-trim with a subsequent moving of the R2 read onto the end of the R1 read (thus the R2 read becomes empty).
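The effect of the two --mismatch-adjustment values can be sketched in Python as below. The sketch assumes the overlapping segments of R1 and R2 have already been aligned column by column (with R2 reverse complemented onto R1's strand), breaks quality ties in favour of R1, and leaves qualities unchanged under pick-best; all of these are assumptions, and adjust_mismatches is a hypothetical helper rather than part of RTG.

```python
def adjust_mismatches(bases1, quals1, bases2, quals2, method="none"):
    """Apply a mismatch adjustment to two aligned overlap segments.

    bases2/quals2 are assumed to be R2's overlap already placed on R1's
    strand, so column i of each segment describes the same fragment base.
    """
    b1, q1, b2, q2 = list(bases1), list(quals1), list(bases2), list(quals2)
    for i in range(len(b1)):
        if b1[i] == b2[i]:
            continue  # agreeing bases are never altered
        if method == "zero-phred":
            q1[i] = q2[i] = 0  # flag both calls as unreliable
        elif method == "pick-best":
            if q1[i] >= q2[i]:
                b2[i] = b1[i]  # R1 call wins (ties go to R1 in this sketch)
            else:
                b1[i] = b2[i]  # R2 call wins
        # method == "none": leave bases and qualities untouched
    return "".join(b1), q1, "".join(b2), q2

# Column 2 disagrees: R1 says G (Q30), R2 says T (Q10).
print(adjust_mismatches("ACGT", [30, 30, 30, 30], "ACTT", [30, 30, 10, 30], "pick-best"))
print(adjust_mismatches("ACGT", [30, 30, 30, 30], "ACTT", [30, 30, 10, 30], "zero-phred"))
```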

After trimming or merging, one or both arms of a pair may have no bases remaining, and a strategy is needed to handle these pairs. By default, such pairs are retained in the output, even if one or both arms are zero-length. Pairs where both arms are zero-length can be dropped from the output with --discard-empty-pairs. If downstream processing cannot handle zero-length reads, --discard-empty-reads will drop a read pair if either of the arms is zero-length.
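The interaction between --min-read-length, --discard-empty-pairs and --discard-empty-reads can be summarised by the following Python sketch. The function names are hypothetical, and it assumes the minimum-length rule is applied to each arm before the pair filters.

```python
def apply_min_read_length(seq, min_read_length=0):
    """Trim a read to zero length if it is shorter than the threshold."""
    return "" if len(seq) < min_read_length else seq

def keep_pair(r1, r2, discard_empty_pairs=False, discard_empty_reads=False):
    """Decide whether a trimmed pair is written to the output."""
    if discard_empty_reads and (not r1 or not r2):
        return False  # either arm is empty
    if discard_empty_pairs and not r1 and not r2:
        return False  # both arms are empty
    return True  # default: keep, even if one or both arms are empty

pairs = [("ACGTAC", "GGTTA"), ("ACGT", "GGT"), ("", "")]
trimmed = [(apply_min_read_length(a, 4), apply_min_read_length(b, 4)) for a, b in pairs]
default_out = [p for p in trimmed if keep_pair(*p)]
no_empty_pairs = [p for p in trimmed if keep_pair(*p, discard_empty_pairs=True)]
no_empty_reads = [p for p in trimmed if keep_pair(*p, discard_empty_reads=True)]
```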

petrim also provides the ability to down-sample a read set by using the --subsample option. This will produce a different sampling each time, unless an explicit randomization seed is specified via --seed.

Formatting with paired-end trimming on the fly¶

Running custom filtering with petrim can be done in standard Unix shells without using additional disk space or unduly slowing down the formatting of reads. The following example demonstrates how to apply paired-end trimming while formatting to SDF:

$ rtg format -f fastq-interleaved -o S12_trimmed.sdf \
<(rtg petrim -l S12_R1.fastq.gz -r S12_R2.fastq.gz -m -o - --interleave)

This can even be combined with fastqtrim to provide extremely

flexible trimming:

$ rtg format -f fastq-interleaved -o S12_trimmed.sdf \
<(rtg petrim -m -o - --interleave \
-l <(rtg fastqtrim -s 12 -E 18 -i S12_R1.fastq.gz -o -) \
-r <(rtg fastqtrim -E 18 -i S12_R2.fastq.gz -o -) \
)

Note

petrim currently assumes Illumina paired-end sequencing and aligns the reads in FR orientation. Sequencing methods that produce arms in a different orientation can be processed by first converting the input files using fastqtrim --reverse-complement, running petrim, and then running fastqtrim --reverse-complement again to restore the reads to their original orientation.

Simulation Commands¶

RTG includes some simulation commands that may be useful for experimenting with the effects of various RTG command parameters or for getting familiar with RTG workflows. A simple simulation series might involve the following commands:

$ rtg genomesim --output sim-ref-sdf --min-length 500000 --max-length 5000000 \
--num-contigs 5

$ rtg popsim --reference sim-ref-sdf --output population.vcf.gz

$ rtg samplesim --input population.vcf.gz --output sample1.vcf.gz \
--output-sdf sample1-sdf --reference sim-ref-sdf --sample sample1

$ rtg readsim --input sample1-sdf --output reads-sdf --machine illumina_pe \
-L 75 -R 75 --coverage 10

$ rtg map --template sim-ref-sdf --input reads-sdf --output sim-mapping \
--sam-rg "@RG\tID:sim-rg\tSM:sample1\tPL:ILLUMINA"

$ rtg snp --template sim-ref-sdf --output sim-name-snp sim-mapping/alignments.bam

genomesim¶

Synopsis:

Use the genomesim command to simulate a reference genome, or to create a baseline reference genome for a research project when an actual genome reference sequence is unavailable.

Syntax:

Specify number of sequences, plus minimum and maximum lengths:

$ rtg genomesim [OPTION]... -o SDF --max-length INT --min-length INT -n INT

Specify explicit sequence lengths (one or more sequences):

$ rtg genomesim [OPTION]... -o SDF -l INT

Example:

$ rtg genomesim -o genomeTest -l 500000

Parameters:

File Input/Output |

||

|---|---|---|

|

|

The name of the output SDF. |

Utility |

||

|---|---|---|

|

Specify a comment to include in the generated SDF. |

|

|

Set the relative frequencies of A,C,G,T in the generated sequence. (Default is 1,1,1,1). |

|

|

|

Prints help on command-line flag usage. |

|

|

Specify the length of generated sequence. May be specified 0 or more times, or as a comma separated list. |

|

Specify the maximum sequence length. |

|

|

Specify the minimum sequence length. |

|

|

|

Specify the number of sequences to generate. |

|

Specify a sequence name prefix to be used for the generated sequences. The default is to name the output sequences ‘simulatedSequenceN’, where N is increasing for each sequence. |

|

|

|

Specify seed for the random number generator. |

Usage:

The genomesim command creates a simulated genome with one or more contiguous sequences, specified either as an explicit length for each contig, or as a number of contigs together with minimum and maximum lengths. The contents of an SDF directory created by genomesim can be exported to a FASTA file using the sdf2fasta command.

This command is primarily useful for providing a simple randomly generated base genome for use with subsequent simulation commands.

Each generated contig is named by appending an increasing numeric index to the specified prefix. For example, --prefix=chr --num-contigs=10 would yield contigs named chr1 through chr10.
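A rough Python model of what the genomesim options describe is shown below: contig lengths drawn between the minimum and maximum, bases drawn according to relative A,C,G,T frequencies, and names formed from a prefix plus an increasing index. The uniform length distribution and starting the index at 1 are assumptions based on the example above, and simulate_genome is a hypothetical helper, not RTG's generator.

```python
import random

def simulate_genome(num_contigs, min_length, max_length,
                    prefix="simulatedSequence", freqs=(1, 1, 1, 1), seed=None):
    """Return {name: sequence} for a randomly generated genome.

    freqs gives relative weights for A, C, G, T (like the 1,1,1,1 default).
    """
    rng = random.Random(seed)  # a fixed seed makes the genome reproducible
    genome = {}
    for i in range(1, num_contigs + 1):
        length = rng.randint(min_length, max_length)  # inclusive bounds
        bases = rng.choices("ACGT", weights=freqs, k=length)
        genome[f"{prefix}{i}"] = "".join(bases)
    return genome

genome = simulate_genome(10, 100, 200, prefix="chr", seed=1)
print(list(genome))  # contig names chr1 .. chr10
```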

cgsim¶

Synopsis:

Simulate Complete Genomics Inc sequencing reads. Supports the original 35 bp read structure (5-10-10-10), and the newer 29 bp read structure (10-9-10).

Syntax:

Generation by genomic coverage multiplier:

$ rtg cgsim [OPTION]... -V INT -t SDF -o SDF -c FLOAT

Generation by explicit number of reads:

$ rtg cgsim [OPTION]... -V INT -t SDF -o SDF -n INT

Example:

$ rtg cgsim -V 1 -t HUMAN_reference -o CG_3x_readst -c 3

Parameters:

File Input/Output |

||

|---|---|---|

|

|

SDF containing input genome. |

|

|

Name for reads output SDF. |

Fragment Generation |

||

|---|---|---|

|

If set, the user-supplied distribution represents desired abundance. |

|

|

|

Allow reads to be drawn from template fragments containing unknown nucleotides. |

|

|

Coverage, must be positive. |

|

|

File containing probability distribution for sequence selection. |

|

If set, the user-supplied distribution represents desired DNA fraction. |

|

|

|

Maximum fragment size (Default is 500) |

|

|

Minimum fragment size (Default is 350) |

|

Rate that the machine will generate new unknowns in the read (Default is 0.0) |

|

|

|

Number of reads to be generated. |

|

File containing probability distribution for sequence selection expressed by taxonomy id. |

|

Complete Genomics |

||

|---|---|---|

|

|

Select Complete Genomics read structure version, 1 (35 bp) or 2 (29 bp) |

Utility |

||

|---|---|---|

|

Comment to include in the generated SDF. |

|

|

|

Print help on command-line flag usage. |

|

Do not create read names in the output SDF. |

|

|

Do not create read qualities in the output SDF. |

|

|

|

Set the range of base quality values permitted e.g.: 3-40 (Default is fixed qualities corresponding to overall machine base error rate) |

|

File containing a single valid read group SAM header line or a string in the form |

|

|

|

Seed for random number generator. |

Usage:

Use the cgsim command to generate simulated Complete Genomics reads, specifying the amount to generate with either --coverage or --num-reads. For more information about Complete Genomics reads, refer to http://www.completegenomics.com

RTG simulation tools allow for deterministic experiment repetition. The --seed parameter, for example, allows for regeneration of exactly the same reads by making the random number generator repeatable (without this flag, a different set of reads will be generated each time).

The --distribution parameter allows you to specify the probability that a read will come from a particular named sequence, for use with metagenomic databases. Probabilities are numbers between zero and one and must sum to one in the file.

readsim¶

Synopsis:

Use the readsim command to generate single or paired end reads of fixed or variable length from a reference genome, introducing machine errors.

Syntax:

Generation by genomic coverage multiplier:

$ rtg readsim [OPTION]... -t SDF --machine STRING -o SDF -c FLOAT

Generation by explicit number of reads:

$ rtg readsim [OPTION]... -t SDF --machine STRING -o SDF -n INT

Example:

$ rtg readsim -t genome_ref -o sim_reads -r 75 --machine illumina_se -c 30

Parameters:

File Input/Output |

||

|---|---|---|

|

|

SDF containing input genome. |

|

Select the sequencing technology to model. Allowed values are [illumina_se, illumina_pe, complete_genomics, complete_genomics_2, 454_pe, 454_se, iontorrent] |

|

|

|

Name for reads output SDF. |

Fragment Generation |

||

|---|---|---|

|

If set, the user-supplied distribution represents desired abundance. |

|

|

|

Allow reads to be drawn from template fragments containing unknown nucleotides. |

|

|

Coverage, must be positive. |

|

|

File containing probability distribution for sequence selection. |

|

If set, the user-supplied distribution represents desired DNA fraction. |

|

|

|

Maximum fragment size (Default is 250) |

|

|

Minimum fragment size (Default is 200) |

|

Rate that the machine will generate new unknowns in the read (Default is 0.0) |

|

|

|

Number of reads to be generated. |

|

File containing probability distribution for sequence selection expressed by taxonomy id. |

|

Illumina PE |

||

|---|---|---|

|

|

Target read length on the left side. |

|

|

Target read length on the right side. |

Illumina SE |

||

|---|---|---|

|

|

Target read length, must be positive. |

454 SE/PE |

||

|---|---|---|

|

Maximum 454 read length (in paired end case the sum of the left and the right read lengths) |

|

|

Minimum 454 read length (in paired end case the sum of the left and the right read lengths) |

|

IonTorrent SE |

||

|---|---|---|

|

Maximum IonTorrent read length. |

|

|

Minimum IonTorrent read length. |

|

Utility |

||

|---|---|---|

|

Comment to include in the generated SDF. |

|

|

|

Print help on command-line flag usage. |

|

Do not create read names in the output SDF. |

|

|

Do not create read qualities in the output SDF. |

|

|

|

Set the range of base quality values permitted e.g.: 3-40 (Default is fixed qualities corresponding to overall machine base error rate) |

|

File containing a single valid read group SAM header line or a string in the form |

|

|

|

Seed for random number generator. |

Usage:

Create simulated reads from a reference genome by either specifying coverage depth or a total number of reads.
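When choosing between --coverage and --num-reads it can help to convert one into the other. The usual back-of-envelope relationship is that mean coverage equals the number of reads multiplied by the bases per read, divided by the genome length; the Python sketch below applies it. This is an approximation only, and readsim's own accounting (for example around unknown nucleotides or how paired reads are counted) may differ.

```python
import math

def reads_for_coverage(coverage, genome_length, bases_per_read):
    """Approximate read count needed for a target mean coverage.

    For paired-end data, pass the combined left + right read length to get
    an approximate number of read pairs instead.
    """
    if coverage <= 0:
        raise ValueError("coverage must be positive")
    return math.ceil(coverage * genome_length / bases_per_read)

# 30x over a 5 Mbp genome with 75 bp single-end reads
print(reads_for_coverage(30, 5_000_000, 75))  # 2000000
```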

A typical use case involves creating a mutated genome by introducing SNPs or CNVs with popsim and samplesim, generating reads from the mutated genome with readsim, and mapping them back to the original reference to verify the parameters used for mapping or variant detection.

RTG simulation tools allow for deterministic experiment repetition. The --seed parameter, for example, allows for regeneration of exactly the same reads by making the random number generator repeatable (without this flag, a different set of reads will be generated each time).

The --distribution parameter allows you to specify the sequence composition of the resulting read set, primarily for use with metagenomic databases. The distribution file is a text file containing lines of the form:

<probability><space><sequence name>

Probabilities must be between zero and one and must sum to one in the file. For reference databases containing taxonomy information, where each species may comprise more than one sequence, it is instead possible to use the --taxonomy-distribution option to specify the probabilities at a per-species level. The format of each line in this case is:

<probability><space><taxon id>

When using --distribution or --taxonomy-distribution, the interpretation must be specified as one of --abundance or --dna-fraction. When using --abundance, each specified probability reflects the chance of selecting the specified sequence (or taxon id) from the set of sequences, and thus for a given abundance a large sequence will be represented by more reads in the resulting set than a short sequence. In contrast, with --dna-fraction each specified probability reflects the chance of a read being derived from the designated sequence, and thus for a given fraction, a large sequence will have a lower depth of coverage than a short sequence.
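The difference between the two interpretations can be made concrete with a small Python sketch. It parses the `<probability><space><sequence name>` lines described above and converts them into per-read selection probabilities, assuming that under --abundance a sequence's share of reads is proportional to abundance multiplied by sequence length. That weighting is our reading of the description above rather than readsim's documented internals, and both function names are hypothetical.

```python
def parse_distribution(text):
    """Parse '<probability> <sequence name>' lines and validate them."""
    dist = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        prob, name = line.split(None, 1)
        dist[name] = float(prob)
    if any(not 0.0 <= p <= 1.0 for p in dist.values()) or abs(sum(dist.values()) - 1.0) > 1e-6:
        raise ValueError("probabilities must be between 0 and 1 and sum to 1")
    return dist

def per_read_probabilities(dist, lengths, mode):
    """Chance that any single read comes from each sequence."""
    if mode == "dna-fraction":
        return dict(dist)  # the file already gives the per-read share
    if mode == "abundance":
        weights = {name: p * lengths[name] for name, p in dist.items()}
        total = sum(weights.values())
        return {name: w / total for name, w in weights.items()}
    raise ValueError(f"unknown mode: {mode}")

dist = parse_distribution("0.5 seqA\n0.5 seqB\n")
lengths = {"seqA": 1000, "seqB": 3000}
print(per_read_probabilities(dist, lengths, "abundance"))     # {'seqA': 0.25, 'seqB': 0.75}
print(per_read_probabilities(dist, lengths, "dna-fraction"))  # {'seqA': 0.5, 'seqB': 0.5}
```

With dna-fraction, each sequence receives half of the reads, so the shorter seqA ends up with three times the depth of coverage of seqB, matching the description above.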

popsim¶

Synopsis:

Use the popsim command to generate a VCF containing simulated population variants. Each generated variant allele has an associated frequency INFO field describing how frequent that allele is in the population.

Syntax:

$ rtg popsim [OPTION]... -o FILE -t SDF

Example:

$ rtg popsim -o pop.vcf -t HUMAN_reference

Parameters:

File Input/Output |

||

|---|---|---|

|

|

Output VCF file name. |

|

|

SDF containing the reference genome. |

Utility |

||

|---|---|---|

|

|

Print help on command-line flag usage. |

|

|

Do not gzip the output. |

|

Seed for the random number generator. |

|

Usage:

The popsim command is used to create a VCF containing variants with population frequency information that can subsequently be used to simulate individual samples using the samplesim command. The population frequency is contained in a VCF INFO field called AF. The types of variants and the allele frequency distribution have been drawn from observed variants and allele frequency distributions in human studies.


samplesim¶

Synopsis:

Use the samplesim command to generate a VCF containing a genotype simulated from population variants according to allele frequency.

Syntax:

$ rtg samplesim [OPTION]... -i FILE -o FILE -t SDF -s STRING

Example:

From a population frequency VCF:

$ rtg samplesim -i pop.vcf -o 1samples.vcf -t HUMAN_reference -s person1 --sex male

From an existing simulated VCF:

$ rtg samplesim -i 1samples.vcf -o 2samples.vcf -t HUMAN_reference -s person2 \
--sex female

Parameters:

File Input/Output |

||

|---|---|---|

|

|

Input VCF containing population variants. |

|

|

Output VCF file name. |

|

If set, output an SDF containing the sample genome. |

|

|

|

SDF containing the reference genome. |

|

|

Name for sample. |

Utility |

||

|---|---|---|

|

If set, treat variants without allele frequency annotation as uniformly likely. |

|

|

|

Print help on command-line flag usage. |

|

|

Do not gzip the output. |

|

Ploidy to use. Allowed values are [auto, diploid, haploid] (Default is auto) |

|

|

Seed for the random number generator. |

|

|

Sex of individual. Allowed values are [male, female, either] (Default is either) |

|

Usage:

The samplesim command is used to simulate an individual's genotype information from a population variant frequency VCF generated by the popsim command or by previous samplesim or childsim commands. The new output VCF will contain all the existing variants and samples, with a new column for the new sample. The genotype at each record of the VCF will be chosen randomly according to the allele frequency specified in the AF field.

If input VCF records do not contain an AF annotation, by default no ALT allele in that record will be selected, and so the sample will be genotyped as 0/0. Alternatively, for simple simulations the --allow-missing-af flag will treat each allele in such records as being equally likely (i.e. effectively equivalent to AF=0.5 for a biallelic variant, AF=0.33,0.33 for a triallelic variant, etc.).
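A minimal Python sketch of this genotype selection follows. It assumes each haplotype independently draws an allele according to the AF values, with the reference allele receiving the remaining probability; the independence assumption and the function names are ours, not a description of samplesim's internals.

```python
import random

def allele_probabilities(af, num_alt, allow_missing_af=False):
    """Selection probabilities for [REF, ALT1, ALT2, ...]."""
    if af is None:
        if not allow_missing_af:
            return [1.0] + [0.0] * num_alt  # no AF: REF always chosen, so 0/0
        return [1.0 / (num_alt + 1)] * (num_alt + 1)  # every allele equally likely
    return [1.0 - sum(af)] + list(af)

def simulate_genotype(af, num_alt, ploidy=2, allow_missing_af=False, rng=random):
    """Draw one allele per haplotype and format a VCF-style GT string."""
    probs = allele_probabilities(af, num_alt, allow_missing_af)
    alleles = rng.choices(range(num_alt + 1), weights=probs, k=ploidy)
    return "/".join(str(a) for a in alleles)

rng = random.Random(3)
print(simulate_genotype(None, 1, rng=rng))  # 0/0 (no AF annotation)
print(simulate_genotype([0.5], 1, rng=rng))  # one of 0/0, 0/1, 1/0, 1/1
```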

The ploidy for each genotype is automatically determined according to the ploidy of that chromosome for the specified sex of the individual, as defined in the reference genome reference.txt file. For more information see RTG reference file format. If the reference SDF does not contain chromosome configuration information, a default ploidy can be specified using the --ploidy flag.

The --output-sdf flag can be used to optionally generate an SDF of the individual's genotype, which can then be used by the readsim command to simulate a read set for the individual.


denovosim¶

Synopsis:

Use the denovosim command to generate a VCF containing a derived genotype containing de novo variants.

Syntax:

$ rtg denovosim [OPTION]... -i FILE --original STRING -o FILE -t SDF -s STRING

Example:

$ rtg denovosim -i sample.vcf --original personA -o 2samples.vcf \
-t HUMAN_reference -s personB

Parameters:

File Input/Output |

||

|---|---|---|

|

|

The input VCF containing parent variants. |

|

The name of the existing sample to use as the original genotype. |

|

|

|

The output VCF file name. |

|

Set to output an SDF of the genome generated. |

|

|

|

The SDF containing the reference genome. |

|

|

The name for the new derived sample. |

Utility |

||

|---|---|---|

|

|

Prints help on command-line flag usage. |

|

|

Set this flag to create the VCF output file without compression. |

|

Set the expected number of de novo mutations per genome (Default is 70). |

|

|

The ploidy to use when the reference genome does not contain a reference text file. Allowed values are [auto, diploid, haploid] (Default is auto) |

|

|

Set the seed for the random number generator. |

|

|

Set this flag to display information regarding de novo mutation points. |

|

Usage:

The denovosim command is used to simulate a derived genotype containing de novo variants from a VCF containing an existing genotype.

The output VCF will contain all the existing variants and samples, along with additional de novo variants. If the original and derived sample names are different, the output will contain a new column for the mutated sample. If the original and derived sample names are the same, the sample in the output VCF is updated rather than creating an entirely new sample column. When a sample receives a de novo mutation, the sample DN field is set to “Y”.

If de novo variants were introduced without regard to neighboring variants, a situation could arise where it is not possible to unambiguously determine the haplotype of the simulated sample. To prevent this, denovosim will not output a de novo variant that overlaps existing variants. Since denovosim chooses candidate de novo locations before reading the input VCF, this occasionally requires skipping a candidate de novo, so the target number of mutations may not always be reached.
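The skipping behaviour can be sketched in Python as follows. The sketch assumes candidates are single positions chosen in advance and existing variants are 1-based inclusive intervals; denovosim's actual candidate generation and overlap test are not described here, and place_de_novo is a hypothetical helper.

```python
def place_de_novo(candidates, existing):
    """Keep only candidate (chrom, pos) sites that avoid existing variants.

    existing: list of (chrom, start, end) intervals, 1-based inclusive.
    """
    accepted = []
    for chrom, pos in candidates:
        if any(c == chrom and start <= pos <= end for c, start, end in existing):
            continue  # would overlap an existing variant, so skip it
        accepted.append((chrom, pos))
    return accepted

candidates = [("chr1", 100), ("chr1", 205), ("chr2", 50)]  # chosen up front
existing = [("chr1", 200, 210)]                            # read from the VCF
accepted = place_de_novo(candidates, existing)
print(len(accepted), "of", len(candidates), "candidates used")  # target not reached
```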

The --output-sdf flag can be used to optionally generate an SDF of the

derived genome which can then be used by the readsim command to

simulate a read set for the new genome.


childsim¶

Synopsis:

Use the childsim command to generate a VCF containing a genotype simulated as a child of two parents.

Syntax:

$ rtg childsim [OPTION]... --father STRING -i FILE --mother STRING -o FILE -t SDF \
-s STRING

Example:

$ rtg childsim --father person1 --mother person2 -i 2samples.vcf -o 3samples.vcf \
-t HUMAN_reference -s person3

Parameters:

File Input/Output |

||

|---|---|---|

|

Name of the existing sample to use as the father. |

|

|

|

Input VCF containing parent variants. |

|

Name of the existing sample to use as the mother. |

|

|

|

Output VCF file name. |

|

If set, output an SDF containing the sample genome. |

|

|

|

SDF containing the reference genome. |

|

|

Name for new child sample. |

Utility |

||

|---|---|---|

|

Probability of extra crossovers per chromosome (Default is 0.01) |

|

|

If set, load genetic maps from this directory for recombination point selection. |

|

|

|

Print help on command-line flag usage. |

|

|

Do not gzip the output. |

|

Ploidy to use. Allowed values are [auto, diploid, haploid] (Default is auto) |

|

|

Seed for the random number generator. |

|

|

Sex of individual. Allowed values are [male, female, either] (Default is either) |

|

|

If set, display information regarding haplotype selection and crossover points. |

|

Usage:

The childsim command is used to simulate an individual's genotype information from a VCF containing the two parent genotypes generated by previous samplesim or childsim commands. The new output VCF will contain all the existing variants and samples, with a new column for the new sample.

The ploidy for each genotype is generated according to the ploidy of that chromosome for the specified sex of the individual, as defined in the reference genome reference.txt file. For more information see RTG reference file format. The generated genotypes are all consistent with Mendelian inheritance (de novo variants can be simulated with the denovosim command).

The --output-sdf flag can be used to optionally generate an SDF of the child's genotype, which can then be used by the readsim command to simulate a read set for the child.

By default, positions for crossover events are chosen according to a uniform distribution. However, if linkage information is available, it can be used to inform the crossover selection procedure. The expected format for this information is described in Genetic map directory, and the directory containing the relevant files can be specified by using the --genetic-map-dir flag.
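The default uniform crossover selection can be pictured with the Python sketch below, which builds the haplotype that one parent transmits for a single chromosome. The model is an assumption made for illustration (one crossover per transmission plus an extra one with the --extra-crossovers-style probability, each at a uniformly chosen point between variant sites) and does not describe childsim's actual procedure; transmit_haplotype is a hypothetical helper.

```python
import random

def transmit_haplotype(hap_a, hap_b, rng, extra_crossover_prob=0.01):
    """Build the haplotype one parent passes on for a single chromosome.

    hap_a/hap_b are the parent's two haplotypes, as equal-length sequences
    of alleles at variant sites. Crossover points are chosen uniformly.
    """
    first, second = (hap_a, hap_b) if rng.random() < 0.5 else (hap_b, hap_a)
    length = len(hap_a)
    if length < 2:
        return list(first)  # nowhere to place a crossover
    n = 1 + (1 if rng.random() < extra_crossover_prob else 0)
    points = sorted(rng.randrange(1, length) for _ in range(n))
    child, start = [], 0
    for point in points:
        child.extend(first[start:point])
        first, second = second, first  # switch parental haplotype at each crossover
        start = point
    child.extend(first[start:])
    return child

rng = random.Random(7)
father = (["0", "1", "1", "0"], ["1", "0", "0", "1"])  # alleles at 4 variant sites
mother = (["0", "0", "1", "1"], ["0", "0", "0", "0"])
child = (transmit_haplotype(*father, rng), transmit_haplotype(*mother, rng))
```

Every allele in the child comes from one of the corresponding parent's two haplotypes at that site, which is what keeps the simulated genotypes Mendelian-consistent.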


pedsamplesim¶

Synopsis:

Generates simulated genotypes for all members of a pedigree. pedsamplesim automatically simulates founder individuals, inheritance by children, and de novo mutations.

Syntax:

$ rtg pedsamplesim [OPTION]... -i FILE -o DIR -p FILE -t SDF

Example:

$ rtg pedsamplesim -t reference.sdf -p family.ped -i popvars.vcf \
-o family_sim --remove-unused

Parameters:

File Input/Output |

||

|---|---|---|

|

|

Input VCF containing parent variants. |

|

|

Directory for output. |

|

If set, output an SDF for the genome of each simulated sample. |

|

|

|

Genome relationships PED file. |

|

|

SDF containing the reference genome. |

Utility |

||

|---|---|---|

|

Probability of extra crossovers per chromosome (Default is 0.01) |

|

|

If set, load genetic maps from this directory for recombination point selection. |

|

|

|

Print help on command-line flag usage. |

|

|

Do not gzip the output. |

|

Expected number of mutations per genome (Default is 70) |

|

|

Ploidy to use. Allowed values are [auto, diploid, haploid] (Default is auto) |

|

|

If set, output only variants used by at least one sample. |

|

|

Seed for the random number generator. |

|

Usage:

The pedsamplesim command uses the methods of samplesim, denovosim, and childsim to greatly simplify the simulation of multiple samples. The input VCF should contain standard allele frequency INFO annotations, which will be used to simulate genotypes for any sample identified as a founder. Any samples in the pedigree that are already present in the input VCF will not be regenerated. To simulate genotypes for only a subset of the members of the pedigree, use pedfilter to create a filtered pedigree file that includes only the required subset.

The supplied pedigree file is first examined to identify any individuals that cannot be simulated according to inheritance from other samples in the pedigree. Note that simulation according to inheritance requires both parents to be present in the pedigree. These samples in the pedigree are treated as founder individuals.
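The founder rule can be sketched in Python. The sketch assumes the standard PED layout, in which the first four whitespace-separated columns are family ID, individual ID, father ID and mother ID (with 0 meaning unknown); find_founders is a hypothetical helper and not part of RTG.

```python
def find_founders(ped_text):
    """Return samples that cannot be simulated by inheritance.

    A sample is a founder unless both of its parents appear in the pedigree.
    """
    parents = {}
    for line in ped_text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue
        _family, sample, father, mother = line.split()[:4]
        parents[sample] = (father, mother)
    return sorted(s for s, (f, m) in parents.items()
                  if f not in parents or m not in parents)

ped = """\
fam1 dad   0   0   1 0
fam1 mom   0   0   2 0
fam1 kid   dad mom 1 0
fam1 gkid  kid 0   2 0
"""
print(find_founders(ped))  # ['dad', 'gkid', 'mom']
```

Here gkid is treated as a founder because only one of its parents is present in the pedigree.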

Founder individuals are simulated using samplesim, where the genotypes are chosen according to the allele frequency annotation in the input VCF.

All newly generated samples may have de novo mutations introduced according to the --num-mutations setting. As with the denovosim command, any de novo mutations introduced in a sample will be genotyped as homozygous reference in other pre-existing samples, and introduced variants will not overlap any pre-existing variant loci.

Samples that can be simulated according to Mendelian inheritance are then generated using childsim. In addition to inheriting any de novo variants from its parents, each child may acquire new de novo mutations of its own.

If the simulated samples will be used for subsequent simulated sequencing, such as via readsim, it is possible to automatically output an SDF containing the simulated genome for each sample by specifying the --output-sdf option, removing the need to run samplereplay separately.

By default, positions for crossover events are chosen according to a uniform distribution. However, if linkage information is available, it can be used to inform the crossover selection procedure. The expected format for this information is described in Genetic map directory, and the directory containing the relevant files can be specified by using the --genetic-map-dir flag.

samplereplay¶

Synopsis:

Use the samplereplay command to generate the genome SDF corresponding to a sample genotype in a VCF file.

Syntax:

$ rtg samplereplay [OPTION]... -i FILE -o SDF -t SDF -s STRING

Example:

$ rtg samplereplay -i 3samples.vcf -o child.sdf -t HUMAN_reference -s person3

Parameters:

File Input/Output |

||

|---|---|---|

|

|

Input VCF containing the sample genotype. |

|

|

Name for output SDF. |

|

|

SDF containing the reference genome. |

|

|

Name of the sample to select from the VCF. |

Utility |

||

|---|---|---|

|

|

Print help on command-line flag usage. |

Usage:

The samplereplay command can be used to generate an SDF of a genotype for a given sample from an existing VCF file. This can be used to generate a genome from the outputs of the samplesim and childsim commands. The output genome can then be used in simulating a read set for the sample using the readsim command.

Every chromosome for which the individual is diploid will have two sequences in the resulting SDF.
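Conceptually, replaying a genotype means applying the alleles chosen on each haplotype to the reference, giving one sequence per haplotype. The Python sketch below shows this for a single chromosome. It assumes 1-based, sorted, non-overlapping variants described as (position, REF, [ALTs]) and a genotype given as one allele-index tuple per variant; replay_haplotypes is hypothetical and not samplereplay's implementation.

```python
def replay_haplotypes(reference, variants, genotype):
    """Return one sequence per haplotype for a single chromosome.

    variants: sorted, non-overlapping (pos, ref, alts) with 1-based pos.
    genotype: one allele-index tuple per variant, e.g. (0, 1) for 0/1.
    """
    ploidy = len(genotype[0]) if genotype else 2
    haplotypes = []
    for h in range(ploidy):
        pieces, cursor = [], 0
        for (pos, ref, alts), gt in zip(variants, genotype):
            start = pos - 1                         # convert to 0-based
            pieces.append(reference[cursor:start])  # unchanged bases
            allele = gt[h]
            pieces.append(ref if allele == 0 else alts[allele - 1])
            cursor = start + len(ref)               # skip the REF bases
        pieces.append(reference[cursor:])
        haplotypes.append("".join(pieces))
    return haplotypes

reference = "ACGTACGT"
variants = [(2, "C", ["T"]), (5, "AC", ["A"])]  # a SNP and a 1 bp deletion
genotype = [(0, 1), (1, 1)]                     # 0/1 and 1/1
print(replay_haplotypes(reference, variants, genotype))  # ['ACGTAGT', 'ATGTAGT']
```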

Utility Commands¶

bgzip¶

Synopsis:

Block compress a file or decompress a block compressed file. Block compressed outputs from the mapping and variant detection commands can be indexed with the index command. They can also be processed with standard gzip tools such as gunzip and zcat.

Syntax:

$ rtg bgzip [OPTION]... FILE+

Example:

$ rtg bgzip alignments.sam

Parameters:

File Input/Output |

||

|---|---|---|

|

|

The compression level to use, between 1 (least but fast) and 9 (highest but slow) (Default is 5) |

|

|

Decompress. |

|

|

Force overwrite of output file. |

|

If set, do not add the block gzip termination block. |

|

|

|

Write on standard output, keep original files unchanged. Implied when using standard input. |

|

File to (de)compress, use ‘-’ for standard input. Must be specified 1 or more times. |

|

Utility |

||

|---|---|---|

|

|

Print help on command-line flag usage. |

Usage:

Use the bgzip command to block compress files. Files such as VCF, BED, SAM, and TSV files must be block compressed before they can be indexed for fast retrieval of records corresponding to specific genomic regions.
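Block compression works because the file is a series of independently compressed gzip members: ordinary gzip tools decompress them back to back, while an index can jump straight to one block. The Python sketch below illustrates that idea only. Real BGZF blocks also carry their compressed size in a gzip extra field and end with a special empty block, both omitted here, so its output is not a valid BGZF file; block_compress is a hypothetical helper.

```python
import gzip

def block_compress(data, block_size=65280):
    """Compress data as independent gzip members of at most block_size bytes."""
    return [gzip.compress(data[i:i + block_size])
            for i in range(0, len(data), block_size)]

data = b"chr1\t100\tA\tG\n" * 20000
blocks = block_compress(data)

# Like zcat, a standard gzip reader decompresses concatenated members in order.
assert gzip.decompress(b"".join(blocks)) == data

# Each block can also be decompressed on its own, which is what makes
# random access through an index possible.
second = gzip.decompress(blocks[1])
```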


index¶

Synopsis:

Create tabix index files for block compressed TAB-delimited genome position data files or BAM index files for BAM files.

Syntax:

Multi-file input specified from command line:

$ rtg index [OPTION]... FILE+

Multi-file input specified in a text file:

$ rtg index [OPTION]... -I FILE

Example:

$ rtg index -f sam alignments.sam.gz

Parameters:

File Input/Output |

||

|---|---|---|

|

|

Format of input to index. Allowed values are [sam, bam, cram, sv, coveragetsv, bed, vcf, auto] (Default is auto) |

|

|

File containing a list of block compressed files (1 per line) containing genome position data. |

|

Block compressed files containing data to be indexed. May be specified 0 or more times. |

|

Utility |

||

|---|---|---|

|

|

Print help on command-line flag usage. |

Usage:

Use the index command to produce tabix indexes for block compressed genome position data files such as SAM files, VCF files, BED files, and the TSV output from RTG commands such as coverage. The index command can also be used to produce BAM indexes for BAM files that have no index.

extract¶

Synopsis:

Extract specified parts of an indexed block compressed genome position data file.

Syntax:

Extract whole file:

$ rtg extract [OPTION]... FILE

Extract specific regions:

$ rtg extract [OPTION]... FILE STRING+

Example:

$ rtg extract alignments.bam 'chr1:2500000~1000'

Parameters:

| File Input/Output | |
|---|---|
| FILE | The indexed block compressed genome position data file to extract. |

| Filtering | |
|---|---|
| STRING+ | The range to display. The format is one of <sequence_name>, <sequence_name>:<start>-<end>, <sequence_name>:<pos>+<length> or <sequence_name>:<pos>~<padding>. May be specified 0 or more times. |

| Reporting | |
|---|---|
| | Set to also display the file header. |
| | Set to only display the file header. |

| Utility | |
|---|---|
| | Print help on command-line flag usage. |

Usage:

Use the extract command to view specific parts of indexed block compressed genome position data files such as those in SAM/BAM/BED/VCF format.
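The region strings accepted by extract, and by the aview region option, come in four forms. The sketch below turns each form into a sequence name plus start and end coordinates. It assumes the following conventions, which are illustrative and not taken from RTG's source:

- Coordinates are 1-based and inclusive.
- <pos>+<length> covers length bases starting at pos.
- <pos>~<padding> extends padding bases on each side of pos, clamped at 1.

It also treats a suffix after the last colon that is not a range as part of the name, so sequence names containing colons stay intact. `Region` and `parse_region` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional


class Region(NamedTuple):
    name: str
    start: Optional[int]  # assumed 1-based, inclusive; None means whole sequence
    end: Optional[int]    # assumed 1-based, inclusive; None means whole sequence


# <a>-<b>, <a>+<b> or <a>~<b> after the last colon
_RANGE = re.compile(r"^(\d+)([-+~])(\d+)$")


def parse_region(spec: str) -> Region:
    name, sep, rest = spec.rpartition(":")
    match = _RANGE.match(rest) if sep else None
    if match is None:
        return Region(spec, None, None)  # plain <sequence_name>
    a, op, b = int(match.group(1)), match.group(2), int(match.group(3))
    if op == "-":    # <start>-<end>
        start, end = a, b
    elif op == "+":  # <pos>+<length>
        start, end = a, a + b - 1
    else:            # <pos>~<padding>
        start, end = max(1, a - b), a + b
    if start < 1 or end < start:
        raise ValueError(f"invalid region: {spec!r}")
    return Region(name, start, end)
```

Under these assumptions, the extract example region 'chr1:2500000~1000' becomes `Region('chr1', 2499000, 2501000)`.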

aview¶

Synopsis:

View read mappings and variants corresponding to a region of the genome, with output as ASCII text to the terminal or as HTML.

Syntax:

$ rtg aview [OPTION]... --region STRING -t SDF FILE+

Example:

$ rtg aview -t hg19 -b omni.vcf -c calls.vcf map/alignments.bam \
    --region Chr10:100000+3 --padding 30

Parameters:

| File Input/Output | |
|---|---|
| -b | VCF file containing baseline variants. |
| | BED file containing regions to overlay. May be specified 0 or more times. |
| -c | VCF file containing called variants. May be specified 0 or more times. |
| | File containing a list of SAM/BAM format files (1 per line). |
| | Read SDF (only needed to indicate correctness of simulated read mappings). May be specified 0 or more times. |
| -t SDF | SDF containing the reference genome. |
| FILE+ | Alignment SAM/BAM files. May be specified 0 or more times. |

| Filtering | |
|---|---|
| --padding | Padding around region of interest (Default is to automatically determine padding to avoid read truncation). |
| --region STRING | The region of interest to display. The format is one of <sequence_name>, <sequence_name>:<start>-<end>, <sequence_name>:<pos>+<length> or <sequence_name>:<pos>~<padding>. |
| | Specify name of sample to select. May be specified 0 or more times, or as a comma separated list. |

| Reporting | |
|---|---|
| | Output as HTML. |
| | Do not use base colors. |
| | Do not use colors. |
| | Display nucleotides instead of dots. |
| | Print alignment CIGARs. |
| | Print alignment MAPQ values. |
| | Print mate position. |
| | Print read names. |
| | Print read group id for each alignment. |
| | Print reference line every N lines (Default is 0). |
| | Print sample id for each alignment. |
| | Print soft clipped bases. |
| | If set, project highlighting for the specified track down through reads (Default projects the union of tracks). |
| | Sort reads first on read group and then on start position. |
| | Sort reads on start position. |
| | Sort reads first on sample id and then on start position. |
| | Display unflattened CGI reads when present. |

| Utility | |
|---|---|
| | Print help on command-line flag usage. |

Usage:

Use the aview command to display a textual view of mappings and variants corresponding to a small region of the reference genome. This is useful when examining evidence for variant calls in a server environment where a graphical display application such as IGV is not available. The aview command is easy to script in order to output displays for multiple regions for later viewing (either as text or HTML).
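Because aview is easy to script, a small driver can loop over a list of regions. The sketch below is hypothetical. It uses only the options that appear in the aview syntax and example (-t, --region, -c, --padding and positional alignment files), assumes rtg is on the PATH, and saves the text display for each region to a separate file. If the saved files contain terminal color codes, the "Do not use colors" reporting option is the one to add.

```python
import subprocess
from pathlib import Path
from typing import List, Optional, Sequence


def aview_command(template_sdf: str, region: str, alignments: Sequence[str],
                  calls: Optional[str] = None,
                  padding: Optional[int] = None) -> List[str]:
    """Build an rtg aview argument list for one region (nothing is executed here)."""
    cmd = ["rtg", "aview", "-t", template_sdf, "--region", region]
    if calls is not None:
        cmd += ["-c", calls]  # VCF of called variants to display
    if padding is not None:
        cmd += ["--padding", str(padding)]
    cmd += list(alignments)   # SAM/BAM files are positional arguments
    return cmd


def save_region_views(template_sdf: str, regions: Sequence[str],
                      alignments: Sequence[str], out_dir: str,
                      calls: Optional[str] = None) -> None:
    """Run aview once per region and write each text display to its own file."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for region in regions:
        cmd = aview_command(template_sdf, region, alignments, calls=calls)
        result = subprocess.run(cmd, capture_output=True, text=True, check=True)
        # Filesystem-friendly name, e.g. Chr10_100000_3.txt
        safe_name = region.replace(":", "_").replace("~", "_").replace("+", "_")
        (out / f"{safe_name}.txt").write_text(result.stdout)
```

For example, `save_region_views("hg19", ["Chr10:100000+3", "chr1:2500000~1000"], ["map/alignments.bam"], "views", calls="calls.vcf")` would write one file per region into a views directory.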

sdfstats¶

Synopsis:

Print statistics that describe a directory of SDF formatted data.

Syntax:

$ rtg sdfstats [OPTION]... SDF+

Example:

$ rtg sdfstats human_READS_SDF
Location           : C:\human_READS_SDF
Parameters         : format -f solexa -o human_READS_SDF c:\users\Elle\human\SRR005490.fastq.gz
SDF Version        : 6
Type               : DNA
Source             : SOLEXA
Paired arm         : UNKNOWN
Number of sequences: 4193903
Maximum length     : 48
Minimum length     : 48
N                  : 931268
A                  : 61100096
C                  : 41452181
G                  : 45262380
T                  : 52561419
Total residues     : 201307344
Quality scores available on this SDF

Parameters:

| File Input/Output | |
|---|---|
| SDF+ | Specifies an SDF on which statistics are to be reported. May be specified 1 or more times. |

| Reporting | |
|---|---|
| | Set to print out the name and length of each sequence. (Not recommended for read sets). |
| | Set to include information about unknown bases (Ns) by read position. |
| | Set to display the mean quality. |
| | Set to display the reference sequence list for the given sex. Allowed values are [male, female, either]. May be specified 0 or more times, or as a comma separated list. |
| | Set to display information about the taxonomy. |
| | Set to include information about unknown bases (Ns). |

| Utility | |
|---|---|
| | Print help on command-line flag usage. |

Usage:

Use the sdfstats command to get information about the contents of SDFs.
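The sdfstats output shown in the example is plain "key : value" text, so it is easy to post-process. The sketch below uses hypothetical helper names and assumes the layout shown in the example. It parses the output and checks that the per-base counts add up to Total residues. For the example, 931268 + 61100096 + 41452181 + 45262380 + 52561419 = 201307344, which also equals 4193903 sequences × 48 bases.

```python
from typing import Dict


def parse_sdfstats(text: str) -> Dict[str, str]:
    """Collect 'key : value' lines into a dict; lines without a colon are skipped."""
    stats: Dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            stats[key.strip()] = value.strip()
    return stats


def base_composition(stats: Dict[str, str]) -> Dict[str, float]:
    """Check per-base counts against Total residues and derive simple fractions."""
    counts = {base: int(stats[base]) for base in "NACGT" if base in stats}
    total = int(stats["Total residues"])
    if sum(counts.values()) != total:
        raise ValueError("per-base counts do not add up to Total residues")
    called = total - counts.get("N", 0)  # bases other than N
    return {
        "total": total,
        "n_fraction": counts.get("N", 0) / total,
        "gc_fraction": (counts.get("G", 0) + counts.get("C", 0)) / called,
    }
```

For the example SDF, the GC fraction of the non-N bases works out to about 0.433.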

sdfsubset¶

Synopsis:

Extract a specified subset of sequences from one SDF and output them to another SDF.

Syntax:

Individual specification of sequence ids:

$ rtg sdfsubset [OPTION]... -i SDF -o SDF STRING+

File list specification of sequence ids:

$ rtg sdfsubset [OPTION]... -i SDF -o SDF -I FILE

Example:

$ rtg sdfsubset -i reads -o subset_reads 10 20 30 40 50
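In this example, 10 20 30 40 50 are numeric sequence ids. The filtering options for this command state that ids start at 0. They also state that the two id-bound options select a half-open range: the lower bound is inclusive ("greater than or equal to") and the upper bound is exclusive ("less than"). The hypothetical helpers below model that selection. This section does not describe how sdfsubset combines explicit ids with the bounds, so the sketch keeps the two cases separate.

```python
from typing import List, Optional, Sequence


def id_range(num_sequences: int, start_id: Optional[int] = None,
             end_id: Optional[int] = None) -> range:
    """Half-open id range [start_id, end_id), clipped to the ids present in the SDF."""
    start = 0 if start_id is None else start_id  # inclusive lower bound
    end = num_sequences if end_id is None else min(end_id, num_sequences)  # exclusive
    return range(start, max(start, end))


def explicit_ids(num_sequences: int, tokens: Sequence[str]) -> List[int]:
    """Validate numeric sequence ids given on the command line (ids start at 0)."""
    ids = []
    for token in tokens:
        seq_id = int(token)
        if not 0 <= seq_id < num_sequences:
            raise ValueError(f"sequence id {seq_id} is outside 0..{num_sequences - 1}")
        ids.append(seq_id)
    return ids
```

For instance, `list(id_range(10, start_id=3, end_id=6))` selects ids 3, 4 and 5. Id 6 is excluded.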

Parameters:

| File Input/Output | |
|---|---|
| -i SDF | Specifies the input SDF. |
| -o SDF | The name of the output SDF. |

| Filtering | |
|---|---|
| | Only output sequences with sequence id less than the given number. (Sequence ids start at 0). |
| | Only output sequences with sequence id greater than or equal to the given number. (Sequence ids start at 0). |
| -I FILE | Name of a file containing a list of sequences to extract, one per line. |
| | Interpret any specified sequence as names instead of numeric sequence ids. |

|

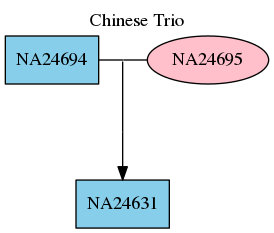

|