COS 426, Spring 2010

NAME, LOGIN

Demonstration of Implemented Features

Art Contest

Szymon Simpson

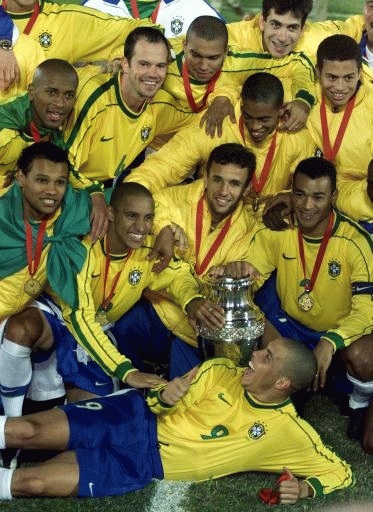

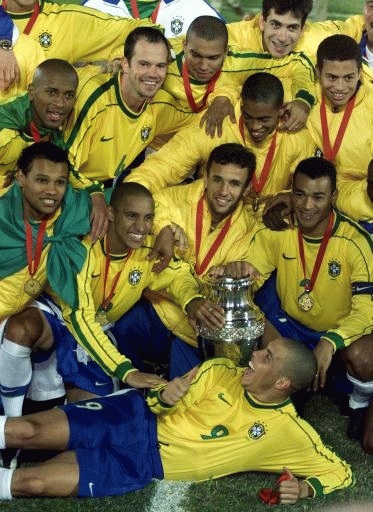

Morphing

Panels: Source Image | Destination Image | Transformation

Median Filtering

Panels: Input | Sigma = .2 | Sigma = .9

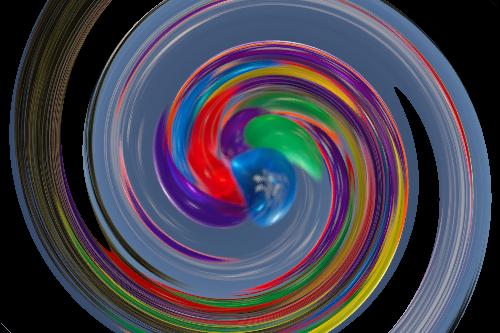

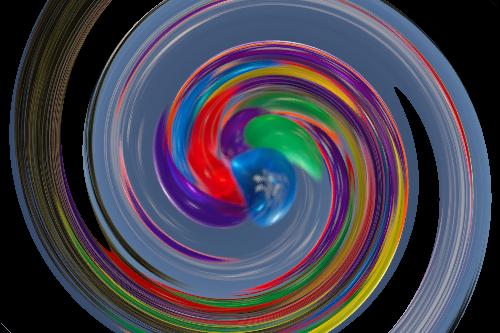

Fun (Swirl)

Panels: Input

Panels: Point Sampling, Angle = 1 | Gaussian Sampling, Angle = 1 | Bilinear Interpolation, Angle = 1

Panels: Point Sampling, Angle = 10 | Gaussian Sampling, Angle = 10 | Bilinear Interpolation, Angle = 10

Compositing

Panels: Top Image | Top Mask | Bottom Image | Bottom Mask | Result

Rotate

Panels: Input

Panels: Point Sampling, Angle = 1 | Gaussian Sampling, Angle = 1 | Bilinear Interpolation, Angle = 1

Panels: Point Sampling, Angle = 2 | Gaussian Sampling, Angle = 2 | Bilinear Interpolation, Angle = 2

Blur

Panels: Input | Sigma = 2 | Sigma = 8

Motion Blur

Panels: Input | amount = 10 | amount = 20 | amount = 30

Dithering

Panels: Input

Panels: Quantize 1 | Quantize 2 | Quantize 3 | Quantize 4 | Quantize 5

Panels: Random Dither 1 | Random Dither 2 | Random Dither 3 | Random Dither 4 | Random Dither 5

Panels: Ordered Dither 1 | Ordered Dither 2 | Ordered Dither 3 | Ordered Dither 4 | Ordered Dither 5

Panels: Floyd-Steinberg 1 | Floyd-Steinberg 2 | Floyd-Steinberg 3 | Floyd-Steinberg 4 | Floyd-Steinberg 5

Scale

Panels: Input | Point Sampling (.5x) | Gaussian Sampling (.5x) | Bilinear Interpolation (.5x)

Panels: Point Sampling (1.2x) | Gaussian Sampling (1.2x) | Bilinear Interpolation (1.2x)

Gamma

Panels: Input | Gamma = .1 | Gamma = 2

Black and White

Panels: Input | Black and White

Edge Detect

Panels: Input | Edge Detect

Sharpen

Panels: Input | Sharpen

Bilateral Filter

Panels: Input | DomainSigma = .7, RangeSigma = .1 | DomainSigma = 3, RangeSigma = .1

Saturation

Panels: Saturation = -1 | Saturation = 0 | Saturation = 1 | Saturation = 2

Extract Channel

Contrast

Panels: Input | Factor = -.5 | Factor = 0 | Factor = 1 | Factor = 3

Implementation Notes

Black and White: as specified.

Gamma: as specified.

Saturation: as specified.

Contrast: as specified.

Extract Channel: as specified.
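The five "as specified" operations are not spelled out in this write-up. As a rough illustration only, here is a minimal C++ sketch of common definitions for them. The `RGB` struct is hypothetical (not the assignment's R2Pixel), and the Rec. 601 luminance weights, the gamma convention out = in^(1/gamma), and the use of the image's mean luminance as the contrast pivot are all assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct RGB { double r, g, b; };  // hypothetical pixel type, channels in [0, 1]

// ASSUMPTION: Rec. 601 luma weights; the assignment's R2Pixel may weight channels differently.
double luminance(const RGB& p) { return 0.299 * p.r + 0.587 * p.g + 0.114 * p.b; }

double clamp01(double v) { return std::min(1.0, std::max(0.0, v)); }

// Moves every channel away from (factor > 1) or toward (factor < 1) a reference gray level.
RGB extrapolate(const RGB& p, double gray, double factor) {
    return {clamp01(gray + factor * (p.r - gray)),
            clamp01(gray + factor * (p.g - gray)),
            clamp01(gray + factor * (p.b - gray))};
}

// Black and white: every channel becomes the pixel's luminance.
RGB blackAndWhite(const RGB& p) {
    double y = luminance(p);
    return {y, y, y};
}

// Gamma: ASSUMED convention out = in^(1/gamma), applied per channel.
RGB applyGamma(const RGB& p, double gamma) {
    assert(gamma > 0);
    double e = 1.0 / gamma;
    return {std::pow(p.r, e), std::pow(p.g, e), std::pow(p.b, e)};
}

// Saturation: pivot is the pixel's own gray value (0 = grayscale, 1 = unchanged).
RGB changeSaturation(const RGB& p, double factor) { return extrapolate(p, luminance(p), factor); }

// Contrast: ASSUMED pivot is the mean luminance of the whole image (0 = flat gray).
void changeContrast(std::vector<RGB>& img, double factor) {
    if (img.empty()) return;
    double mean = 0.0;
    for (const RGB& p : img) mean += luminance(p);
    mean /= static_cast<double>(img.size());
    for (RGB& p : img) p = extrapolate(p, mean, factor);
}

// Extract channel: keep channel 0 (red), 1 (green) or 2 (blue) and zero the others.
RGB extractChannel(const RGB& p, int channel) {
    assert(channel >= 0 && channel <= 2);
    return {channel == 0 ? p.r : 0.0, channel == 1 ? p.g : 0.0, channel == 2 ? p.b : 0.0};
}
```

Under these definitions, Saturation = 0 and Factor = 0 both collapse toward gray, and negative factors push colors past the pivot, which is consistent with the demo parameters shown above.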

Sharpen: used the 3x3 filter mentioned in class.

Edge Detect: used the 3x3 filter mentioned in class.
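The exact filters "mentioned in class" are not reproduced here, so the following minimal sketch assumes two common textbook 3x3 kernels: a sharpen kernel whose weights sum to 1 (flat regions are preserved) and a Laplacian-style edge kernel whose weights sum to 0 (flat regions go to black). The names `Kernel3`, `kSharpen`, `kEdgeDetect` and `filter3x3` are hypothetical, and the image is simplified to one channel.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <vector>

using Kernel3 = std::array<std::array<double, 3>, 3>;

// ASSUMED kernels: common 3x3 filters, not necessarily the exact ones from class.
const Kernel3 kSharpen = {{{-1, -1, -1}, {-1, 9, -1}, {-1, -1, -1}}};
const Kernel3 kEdgeDetect = {{{-1, -1, -1}, {-1, 8, -1}, {-1, -1, -1}}};

// Applies a 3x3 kernel to a w x h single-channel image (row-major, values in [0, 1]).
// Both kernels are symmetric, so correlation and convolution give the same result.
// Neighbours outside the image are clamped to the nearest edge pixel; output is clamped to [0, 1].
std::vector<double> filter3x3(const std::vector<double>& img, int w, int h, const Kernel3& k) {
    assert(static_cast<int>(img.size()) == w * h);
    std::vector<double> out(img.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            double sum = 0.0;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    int sx = std::min(std::max(x + dx, 0), w - 1);
                    int sy = std::min(std::max(y + dy, 0), h - 1);
                    sum += k[dy + 1][dx + 1] * img[sy * w + sx];
                }
            }
            out[y * w + x] = std::min(1.0, std::max(0.0, sum));
        }
    }
    return out;
}
```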

Gaussian Blur: used an n x n matrix as the filter kernel, where n is determined by sigma (as specified). Built the kernel by applying the 1D Gaussian along its columns and then along its rows, then normalized it so its weights sum to 1. Then convolved the kernel with the image at every pixel.
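A minimal single-channel sketch of the kernel construction and convolution described above. The size rule r = ceil(3 * sigma), n = 2r + 1 is an assumption standing in for the one "as specified", and `gaussianKernel` and `gaussianBlur` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Builds an n x n Gaussian kernel (n = 2r + 1) from a 1D Gaussian applied along the
// columns and then the rows (an outer product), normalised so the weights sum to 1.
// ASSUMPTION: r = ceil(3 * sigma); the assignment's exact size rule is not reproduced here.
std::vector<double> gaussianKernel(double sigma, int& n) {
    assert(sigma > 0);
    int r = static_cast<int>(std::ceil(3.0 * sigma));
    n = 2 * r + 1;
    std::vector<double> g(n);
    for (int i = 0; i < n; ++i) {
        double d = i - r;
        g[i] = std::exp(-d * d / (2.0 * sigma * sigma));
    }
    std::vector<double> k(n * n);
    double total = 0.0;
    for (int row = 0; row < n; ++row) {
        for (int col = 0; col < n; ++col) {
            k[row * n + col] = g[row] * g[col];
            total += k[row * n + col];
        }
    }
    for (double& v : k) v /= total;
    return k;
}

// Convolves a w x h single-channel image with the kernel; edge pixels are clamped.
std::vector<double> gaussianBlur(const std::vector<double>& img, int w, int h, double sigma) {
    assert(static_cast<int>(img.size()) == w * h);
    int n = 0;
    std::vector<double> k = gaussianKernel(sigma, n);
    int r = n / 2;
    std::vector<double> out(img.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            double sum = 0.0;
            for (int j = -r; j <= r; ++j) {
                for (int i = -r; i <= r; ++i) {
                    int sx = std::min(std::max(x + i, 0), w - 1);
                    int sy = std::min(std::max(y + j, 0), h - 1);
                    sum += k[(j + r) * n + (i + r)] * img[sy * w + sx];
                }
            }
            out[y * w + x] = sum;
        }
    }
    return out;
}
```

Because the 2D kernel is separable, the same result could also be computed as two 1D passes, which is cheaper for large sigma.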

Motion Blur: used a 1D filter of length amount, where filter[i] = i + 1 for i = 0, ..., amount - 1. Convolved each pixel with this filter and normalized on the fly.
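A minimal sketch of the ramp filter above on a single-channel image. The write-up does not state the blur direction or which end of the ramp sits on the current pixel, so this assumes a horizontal blur in which filter[i] weights the pixel (amount - 1 - i) columns to the left, giving the current pixel the largest weight and a fading trail. "Normalized on the fly" is read here as dividing by the weights of the samples that actually fell inside the image. `motionBlur` is a hypothetical name.

```cpp
#include <cassert>
#include <vector>

// Horizontal motion blur with a ramp filter: filter[i] = i + 1 for i in [0, amount).
// ASSUMPTION: filter[i] weights the pixel (amount - 1 - i) columns to the left.
// Samples outside the image are skipped and the sum is divided by the weights used.
std::vector<double> motionBlur(const std::vector<double>& img, int w, int h, int amount) {
    assert(amount >= 1 && static_cast<int>(img.size()) == w * h);
    std::vector<double> out(img.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            double sum = 0.0, weight = 0.0;
            for (int i = 0; i < amount; ++i) {
                int sx = x - (amount - 1 - i);
                if (sx < 0) continue;
                double f = i + 1;
                sum += f * img[y * w + sx];
                weight += f;
            }
            // weight > 0 because i = amount - 1 always samples the current pixel.
            out[y * w + x] = sum / weight;
        }
    }
    return out;
}
```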

Median Filter: used an n x n filter window, where n was determined by sigma (as specified for the Gaussian). Then initialized a linear array of n*n pointers to R2Pixel objects, one per window position. For each pixel, pointers to it and to its neighbors inside the square window were added to the array. Afterwards, only the first n*n/2 R2Pixels were sorted by luminance using a partial selection sort, and the (n*n/2)th pixel (the median) replaced the pixel being examined.
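A minimal single-channel sketch of the median filter described above, using plain luminance values instead of R2Pixel pointers. The window-size rule (r = ceil(3 * sigma), n = 2r + 1) and edge clamping are assumptions; `medianFilter` is a hypothetical name. The selection sort stops once position n*n/2 is in its final place, since nothing past the median matters.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Median filter on a w x h single-channel (luminance) image.
// ASSUMPTIONS: window size n = 2 * ceil(3 * sigma) + 1, edge pixels are clamped.
std::vector<double> medianFilter(const std::vector<double>& img, int w, int h, double sigma) {
    assert(sigma > 0 && static_cast<int>(img.size()) == w * h);
    int r = static_cast<int>(std::ceil(3.0 * sigma));
    int n = 2 * r + 1;
    int m = n * n / 2;                  // index of the median in the sorted window
    std::vector<double> window(n * n);  // holds n*n samples, not n
    std::vector<double> out(img.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            int idx = 0;
            for (int j = -r; j <= r; ++j)
                for (int i = -r; i <= r; ++i) {
                    int sx = std::min(std::max(x + i, 0), w - 1);
                    int sy = std::min(std::max(y + j, 0), h - 1);
                    window[idx++] = img[sy * w + sx];
                }
            // Partial selection sort: positions 0..m end up holding the m+1 smallest values.
            for (int a = 0; a <= m; ++a) {
                int best = a;
                for (int b = a + 1; b < n * n; ++b)
                    if (window[b] < window[best]) best = b;
                std::swap(window[a], window[best]);
            }
            out[y * w + x] = window[m];
        }
    }
    return out;
}
```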

Bilateral Filter: used an n x n Gaussian kernel, where n is determined by domainsigma (as specified). Then convolved every pixel with this kernel, additionally weighting each neighbor inside the kernel's window by the luminance difference between it and the pixel being examined (controlled by rangesigma). Then normalized on the fly.
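A minimal single-channel sketch of a bilateral filter in the form described above: each neighbor's weight is a spatial Gaussian (domainSigma) times a Gaussian of its intensity difference from the center pixel (rangeSigma). The window radius ceil(3 * domainSigma) and the use of a Gaussian for the range term are assumptions; `bilateralFilter` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Bilateral filter on a w x h single-channel (luminance) image.
// ASSUMPTION: window radius r = ceil(3 * domainSigma); Gaussian range weight.
// Out-of-image neighbours are skipped and the sum is divided by the weights used.
std::vector<double> bilateralFilter(const std::vector<double>& img, int w, int h,
                                    double domainSigma, double rangeSigma) {
    assert(domainSigma > 0 && rangeSigma > 0 && static_cast<int>(img.size()) == w * h);
    int r = static_cast<int>(std::ceil(3.0 * domainSigma));
    std::vector<double> out(img.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            double center = img[y * w + x];
            double sum = 0.0, weight = 0.0;
            for (int j = -r; j <= r; ++j) {
                for (int i = -r; i <= r; ++i) {
                    int sx = x + i, sy = y + j;
                    if (sx < 0 || sy < 0 || sx >= w || sy >= h) continue;
                    double v = img[sy * w + sx];
                    double diff = v - center;
                    double wgt = std::exp(-(i * i + j * j) / (2.0 * domainSigma * domainSigma)) *
                                 std::exp(-(diff * diff) / (2.0 * rangeSigma * rangeSigma));
                    sum += wgt * v;
                    weight += wgt;
                }
            }
            out[y * w + x] = sum / weight;  // the centre pixel alone contributes weight 1
        }
    }
    return out;
}
```

With a small rangeSigma (such as the .1 used in the demo), pixels across a strong edge get almost no weight, so edges stay sharp while flat regions are smoothed.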

Scale (Point Sampling): created a temporary image with the new dimensions (based on the arguments). Then set each pixel in the new image to the nearest pixel at its corresponding location in the original image.

Scale (Gaussian Sampling): same as point sampling, except that a Gaussian-weighted average of the corresponding pixel in the original image and its neighbors was used.

Scale (Bilinear Interpolation): also the same as point sampling, except that the value was bilinearly interpolated from the pixels surrounding the corresponding non-integer point in the original image.
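A minimal single-channel sketch of the three sampling methods and inverse-mapped scaling described above. `Image`, `Sampling`, `sample` and `scaleImage` are hypothetical names, and the Gaussian sampler's fixed sigma of 0.5 pixel (radius 2) is an assumption; a downscaling filter would usually widen it in proportion to 1/scale.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Image {
    int w, h;
    std::vector<double> px;  // single channel, row-major
    double at(int x, int y) const {  // clamps to the nearest edge pixel
        x = std::min(std::max(x, 0), w - 1);
        y = std::min(std::max(y, 0), h - 1);
        return px[y * w + x];
    }
};

enum class Sampling { Point, Gaussian, Bilinear };

// Samples img at the non-integer position (u, v) with the chosen method.
double sample(const Image& img, double u, double v, Sampling mode) {
    switch (mode) {
    case Sampling::Point:  // nearest pixel
        return img.at(static_cast<int>(std::lround(u)), static_cast<int>(std::lround(v)));
    case Sampling::Bilinear: {  // weighted by distance to the four surrounding pixels
        int x0 = static_cast<int>(std::floor(u)), y0 = static_cast<int>(std::floor(v));
        double fx = u - x0, fy = v - y0;
        double top = (1 - fx) * img.at(x0, y0) + fx * img.at(x0 + 1, y0);
        double bot = (1 - fx) * img.at(x0, y0 + 1) + fx * img.at(x0 + 1, y0 + 1);
        return (1 - fy) * top + fy * bot;
    }
    case Sampling::Gaussian: {  // ASSUMPTION: fixed sigma of 0.5 px, radius 2
        const double sigma = 0.5;
        const int r = 2;
        int cx = static_cast<int>(std::lround(u)), cy = static_cast<int>(std::lround(v));
        double sum = 0.0, weight = 0.0;
        for (int j = -r; j <= r; ++j) {
            for (int i = -r; i <= r; ++i) {
                double dx = cx + i - u, dy = cy + j - v;
                double wgt = std::exp(-(dx * dx + dy * dy) / (2 * sigma * sigma));
                sum += wgt * img.at(cx + i, cy + j);
                weight += wgt;
            }
        }
        return sum / weight;
    }
    }
    return 0.0;
}

// Inverse mapping: each destination pixel (x, y) reads the source at (x / sx, y / sy),
// so every output pixel receives exactly one value.
Image scaleImage(const Image& src, double sx, double sy, Sampling mode) {
    assert(sx > 0 && sy > 0);
    Image dst{static_cast<int>(src.w * sx), static_cast<int>(src.h * sy), {}};
    dst.px.assign(static_cast<std::size_t>(dst.w) * dst.h, 0.0);
    for (int y = 0; y < dst.h; ++y)
        for (int x = 0; x < dst.w; ++x)
            dst.px[y * dst.w + x] = sample(src, x / sx, y / sy, mode);
    return dst;
}
```

Mapping from destination back to source (rather than pushing source pixels forward) avoids holes when enlarging, which is why the same `sample` routine can be reused unchanged for rotation and the swirl.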

Rotate (Point Sampling): set each pixel in a temporary image to its counterpart in the original image rotated by a given angle.

Rotate (Gaussian Sampling): same as point sampling, but set each pixel in the temporary image to a Gaussian-weighted average of pixels from the original image.

Rotate (Bilinear Interpolation): same as point sampling, but set each pixel in the temporary image to a bilinear interpolation of the pixels surrounding the corresponding non-integer point in the original image.
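A minimal single-channel sketch of rotation by inverse mapping with bilinear sampling. The write-up does not say which point the image is rotated about, so this assumes the image center and an angle in radians; destination pixels whose source point falls outside the original image are left black. `Image`, `bilinear` and `rotateImage` are hypothetical names, and the point and Gaussian variants would only swap out the `bilinear` call.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Image {
    int w, h;
    std::vector<double> px;  // single channel, row-major
    double at(int x, int y) const {  // clamps to the nearest edge pixel
        x = std::min(std::max(x, 0), w - 1);
        y = std::min(std::max(y, 0), h - 1);
        return px[y * w + x];
    }
};

// Bilinear interpolation of the four pixels around the non-integer point (u, v).
double bilinear(const Image& img, double u, double v) {
    int x0 = static_cast<int>(std::floor(u)), y0 = static_cast<int>(std::floor(v));
    double fx = u - x0, fy = v - y0;
    double top = (1 - fx) * img.at(x0, y0) + fx * img.at(x0 + 1, y0);
    double bot = (1 - fx) * img.at(x0, y0 + 1) + fx * img.at(x0 + 1, y0 + 1);
    return (1 - fy) * top + fy * bot;
}

// Rotates src by `angle` radians about the image centre (ASSUMPTION) using inverse mapping.
Image rotateImage(const Image& src, double angle) {
    assert(static_cast<int>(src.px.size()) == src.w * src.h);
    Image dst{src.w, src.h, std::vector<double>(src.px.size(), 0.0)};
    const double eps = 1e-9;  // tolerance for floating-point error at the borders
    double cx = (src.w - 1) / 2.0, cy = (src.h - 1) / 2.0;
    double c = std::cos(angle), s = std::sin(angle);
    for (int y = 0; y < dst.h; ++y) {
        for (int x = 0; x < dst.w; ++x) {
            double dx = x - cx, dy = y - cy;
            double u = c * dx + s * dy + cx;  // rotate (dx, dy) by -angle to find the source
            double v = -s * dx + c * dy + cy;
            if (u < -eps || v < -eps || u > src.w - 1 + eps || v > src.h - 1 + eps) continue;
            dst.px[y * dst.w + x] = bilinear(src, u, v);
        }
    }
    return dst;
}
```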

Fun Swirl (Point Sampling): set each pixel in the target image to its counterpart in the original image, rotated by an angle that depends on the pixel's distance from the origin: the farther the pixel is from the origin, the more it is rotated.

Fun Swirl (Gaussian Sampling): same as point sampling, except that a Gaussian-weighted average was used.

Fun Swirl (Bilinear Interpolation): same as point sampling, but a bilinear interpolation between a non-integer coordinate and its neighbors was used.
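A minimal single-channel sketch of the swirl with bilinear sampling. Several details are assumptions not stated in the write-up: the "origin" is taken to be the image center, the angle is in radians, and the rotation grows linearly with distance, theta(d) = angle * d / maxDist. `Image`, `bilinear` and `swirl` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Image {
    int w, h;
    std::vector<double> px;  // single channel, row-major
    double at(int x, int y) const {  // clamps to the nearest edge pixel
        x = std::min(std::max(x, 0), w - 1);
        y = std::min(std::max(y, 0), h - 1);
        return px[y * w + x];
    }
};

// Bilinear interpolation of the four pixels around the non-integer point (u, v).
double bilinear(const Image& img, double u, double v) {
    int x0 = static_cast<int>(std::floor(u)), y0 = static_cast<int>(std::floor(v));
    double fx = u - x0, fy = v - y0;
    double top = (1 - fx) * img.at(x0, y0) + fx * img.at(x0 + 1, y0);
    double bot = (1 - fx) * img.at(x0, y0 + 1) + fx * img.at(x0 + 1, y0 + 1);
    return (1 - fy) * top + fy * bot;
}

// Swirl: rotate each pixel about the centre by an angle proportional to its distance.
// ASSUMPTIONS: centre as origin, radians, theta(d) = angle * d / maxDist.
Image swirl(const Image& src, double angle) {
    assert(static_cast<int>(src.px.size()) == src.w * src.h);
    Image dst{src.w, src.h, std::vector<double>(src.px.size(), 0.0)};
    double cx = (src.w - 1) / 2.0, cy = (src.h - 1) / 2.0;
    double maxDist = std::max(std::hypot(cx, cy), 1e-9);
    for (int y = 0; y < dst.h; ++y) {
        for (int x = 0; x < dst.w; ++x) {
            double dx = x - cx, dy = y - cy;
            double theta = angle * std::hypot(dx, dy) / maxDist;
            double c = std::cos(theta), s = std::sin(theta);
            // Inverse rotation by theta; rotation preserves distance, so theta is the
            // same for the source point and the destination pixel.
            double u = c * dx + s * dy + cx;
            double v = -s * dx + c * dy + cy;
            dst.px[y * dst.w + x] = bilinear(src, u, v);  // out-of-range reads are clamped
        }
    }
    return dst;
}
```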

Quantize: subdivided the [0, 1] interval into 2^nbits discrete levels (nbits bits per channel) and snapped each pixel's color channels to the nearest of these levels.
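A minimal sketch of uniform quantization of one channel value to nbits bits, i.e. L = 2^nbits evenly spaced levels 0, 1/(L-1), ..., 1. Rounding to the nearest level is an assumption (some versions floor instead), and `quantize` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>

// Quantizes p in [0, 1] to one of L = 2^nbits evenly spaced levels.
// ASSUMPTION: values are rounded to the nearest level.
double quantize(double p, int nbits) {
    assert(nbits >= 1 && nbits <= 16);
    double levels = static_cast<double>(1 << nbits);
    double q = std::floor(p * (levels - 1) + 0.5);
    q = std::fmin(std::fmax(q, 0.0), levels - 1);  // guard against p slightly outside [0, 1]
    return q / (levels - 1);
}
```

For example, with nbits = 2 the allowed values are 0, 1/3, 2/3 and 1, so 0.3 snaps to 1/3.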

Random Dither: added random noise to the source image before quantizing. The amount of noise was proportional to 1/nbits, which seemed to yield good results.
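A minimal sketch of random dithering on a single channel. Following the write-up, the noise amplitude scales with 1/nbits; the uniform distribution on [-0.5, 0.5) and the fixed seed are illustrative assumptions, and `quantize` and `randomDither` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Rounds p in [0, 1] to the nearest of 2^nbits evenly spaced levels.
double quantize(double p, int nbits) {
    assert(nbits >= 1 && nbits <= 16);
    double levels = static_cast<double>(1 << nbits);
    double q = std::fmin(std::fmax(std::floor(p * (levels - 1) + 0.5), 0.0), levels - 1);
    return q / (levels - 1);
}

// Adds uniform noise before quantizing.
// ASSUMPTION: noise is uniform in [-0.5, 0.5) divided by nbits ("proportional to 1/nbits").
std::vector<double> randomDither(const std::vector<double>& img, int nbits, unsigned seed = 42) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> noise(-0.5, 0.5);
    std::vector<double> out(img.size());
    for (std::size_t i = 0; i < img.size(); ++i) {
        double p = img[i] + noise(rng) / nbits;
        out[i] = quantize(std::min(1.0, std::max(0.0, p)), nbits);
    }
    return out;
}
```

On a flat 50% gray with nbits = 1, this produces a mix of black and white pixels instead of a single flat level.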

Ordered Dither: applied a 4x4 Bayer pattern matrix to the image before quantizing.
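A minimal single-channel sketch of ordered dithering with the standard 4x4 Bayer index matrix. Each value is placed between two adjacent quantization levels and rounds up only when its fractional position exceeds the threshold (B + 0.5) / 16 for that pixel's position in the tiled pattern; this threshold formula is one common convention, assumed here. `kBayer4` and `orderedDither` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Standard 4x4 Bayer index matrix (values 0..15).
const int kBayer4[4][4] = {{0, 8, 2, 10}, {12, 4, 14, 6}, {3, 11, 1, 9}, {15, 7, 13, 5}};

// Ordered dither of a w x h single-channel image to nbits bits per channel.
std::vector<double> orderedDither(const std::vector<double>& img, int w, int h, int nbits) {
    assert(nbits >= 1 && nbits <= 16 && static_cast<int>(img.size()) == w * h);
    double top = static_cast<double>((1 << nbits) - 1);  // index of the highest level
    std::vector<double> out(img.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            double v = std::fmin(std::fmax(img[y * w + x], 0.0), 1.0) * top;
            double base = std::floor(v);  // lower of the two neighbouring levels
            double threshold = (kBayer4[y % 4][x % 4] + 0.5) / 16.0;
            if (v - base > threshold) base += 1.0;  // round up where the pattern allows
            out[y * w + x] = base / top;
        }
    }
    return out;
}
```

A flat 50% gray dithered to 1 bit turns on exactly the 8 pixels of each 4x4 tile whose Bayer index is below 8.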

Floyd-Steinberg Dither: computed each pixel's quantization error and diffused it to neighboring pixels using the Floyd-Steinberg error diffusion pattern.
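A minimal single-channel sketch of Floyd-Steinberg error diffusion with the standard 7/16, 3/16, 5/16, 1/16 weights, scanning left to right and top to bottom. Dropping error that would fall outside the image and rounding to the nearest level are assumptions; `quantize` and `floydSteinberg` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Rounds p in [0, 1] to the nearest of 2^nbits evenly spaced levels.
double quantize(double p, int nbits) {
    assert(nbits >= 1 && nbits <= 16);
    double levels = static_cast<double>(1 << nbits);
    double q = std::fmin(std::fmax(std::floor(p * (levels - 1) + 0.5), 0.0), levels - 1);
    return q / (levels - 1);
}

// Each pixel's error goes to not-yet-visited neighbours: 7/16 right, 3/16 below-left,
// 5/16 below, 1/16 below-right. Error that would leave the image is dropped.
std::vector<double> floydSteinberg(std::vector<double> img, int w, int h, int nbits) {
    assert(static_cast<int>(img.size()) == w * h);
    auto spread = [&](int x, int y, double amount) {
        if (x >= 0 && x < w && y < h) img[y * w + x] += amount;
    };
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            double old = img[y * w + x];
            double q = quantize(std::fmin(std::fmax(old, 0.0), 1.0), nbits);
            double err = old - q;
            img[y * w + x] = q;
            spread(x + 1, y, err * 7.0 / 16.0);
            spread(x - 1, y + 1, err * 3.0 / 16.0);
            spread(x, y + 1, err * 5.0 / 16.0);
            spread(x + 1, y + 1, err * 1.0 / 16.0);
        }
    }
    return img;
}
```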

Composite: used the over operation discussed in class on each pixel.
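A minimal sketch of the Porter-Duff over operation on one pixel with straight (non-premultiplied) alpha. Taking alpha from the top and bottom masks is an assumption about how the composite filter reads its masks; `RGBA` and `over` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct RGBA { double r, g, b, a; };  // straight (non-premultiplied) colour, alpha in [0, 1]

// Places `top` over `bottom`: alpha_out = a_t + a_b (1 - a_t), and each colour channel is
// the alpha-weighted mix divided by alpha_out to return to straight alpha.
RGBA over(const RGBA& top, const RGBA& bottom) {
    double a = top.a + bottom.a * (1.0 - top.a);
    if (a <= 0.0) return {0.0, 0.0, 0.0, 0.0};  // both layers fully transparent
    auto mix = [&](double ct, double cb) {
        return (ct * top.a + cb * bottom.a * (1.0 - top.a)) / a;
    };
    return {mix(top.r, bottom.r), mix(top.g, bottom.g), mix(top.b, bottom.b), a};
}
```

With an opaque bottom layer this reduces to a_t * top + (1 - a_t) * bottom, which is why an over operation with alpha = t also works as a cross-fade.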

Morph: First interpolated between the source and target segments using the time step, then applied the Beier-Neely morphing algorithm to produce a new source image. Then interpolated between the target and source segments (in the other direction) using the time step, and used the Beier-Neely algorithm to produce a new destination image. Finally, layered the new source and destination images on top of one another using an over operation with alpha = time step (a cross-fade).
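A minimal sketch of the Beier-Neely field warp for one output pixel: given the feature lines of the frame being built and the corresponding lines in an original image, it returns the point to sample in that original image. `Vec2`, `Segment`, `lerpSegment` and `beierNeely` are hypothetical names, and the tuning constants A, B and P are illustrative defaults rather than values from the assignment. Note that the perpendicular offset uses full-length segment vectors, dividing by the length explicitly.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 { double x, y; };
Vec2 operator+(Vec2 a, Vec2 b) { return {a.x + b.x, a.y + b.y}; }
Vec2 operator-(Vec2 a, Vec2 b) { return {a.x - b.x, a.y - b.y}; }
Vec2 operator*(double s, Vec2 a) { return {s * a.x, s * a.y}; }
double dot(Vec2 a, Vec2 b) { return a.x * b.x + a.y * b.y; }
double length(Vec2 a) { return std::sqrt(dot(a, a)); }
Vec2 perp(Vec2 a) { return {-a.y, a.x}; }

struct Segment { Vec2 p, q; };

// Interpolates segment endpoints; t = 0 gives a, t = 1 gives b.
Segment lerpSegment(const Segment& a, const Segment& b, double t) {
    return {(1 - t) * a.p + t * b.p, (1 - t) * a.q + t * b.q};
}

// For pixel X in the frame whose lines are `dst`, returns where to sample the image whose
// lines are `src`. A, B, P are ILLUSTRATIVE tuning constants.
Vec2 beierNeely(Vec2 X, const std::vector<Segment>& dst, const std::vector<Segment>& src,
                double A = 0.5, double B = 1.0, double P = 0.5) {
    assert(dst.size() == src.size() && !dst.empty());
    Vec2 dsum{0, 0};
    double wsum = 0.0;
    for (std::size_t i = 0; i < dst.size(); ++i) {
        Vec2 pq = dst[i].q - dst[i].p;
        double len = length(pq);  // full segment length, not a unit vector
        double u = dot(X - dst[i].p, pq) / (len * len);  // position along the line
        double v = dot(X - dst[i].p, perp(pq)) / len;    // signed distance from the line
        Vec2 spq = src[i].q - src[i].p;
        Vec2 Xi = src[i].p + u * spq + (v / length(spq)) * perp(spq);
        double dist = (u < 0) ? length(X - dst[i].p) : (u > 1) ? length(X - dst[i].q) : std::fabs(v);
        double w = std::pow(std::pow(len, P) / (A + dist), B);
        dsum = dsum + w * (Xi - X);
        wsum += w;
    }
    return X + (1.0 / wsum) * dsum;
}
```

In the usual formulation, a frame at time t uses lines_t[i] = lerpSegment(srcLines[i], dstLines[i], t), samples the source image at beierNeely(X, lines_t, srcLines) and the destination image at beierNeely(X, lines_t, dstLines), and blends the two samples with weight t.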

Feedback

- How long did you spend on this assignment?

- A while, but not an insanely unreasonable amount of time. I honestly could have finished sooner, but I wanted everything to be as good as possible.

- Was it too hard, too easy, or just right?

- If it were any easier, I wouldn't know as much as I do now. Requiring three types of sampling for both rotation and the fun filter seemed like a bit much, though, especially since the code is almost exactly the same as for scaling.

- What was the best part of the assignment?

- Morphing Professor Rusinkiewicz's face into Homer Simpson's.

- What was the worst part of the assignment?

- I didn't know that converting an R2Segment to an R2Vector using the Vector() function returned a UNIT vector rather than one with the same length as the segment. It caused me a lot of trouble, but I was really happy when I figured it out, so it's fine. More specific comments in the helper classes would be nice, though :)

- How could it be improved for next year?

- The instructions on how to use the -composite option are incorrect. They should say:

-composite file:bottom_mask file:top_image file:top_mask int:operation(0=over)

Other than that I thought the assignment was very rewarding and pretty enjoyable.